Assess student learning regularly and a pattern shows up quickly: teaching something is not the same as having evidence that it was learned. Learners can stay quiet, finish tasks, and still miss the core idea. If you wait until the end to find out, you end up reteaching under pressure.

I treat assessment as a way to make smarter decisions while learning is happening. Short quizzes, scenario prompts, reflections, and discussions tell me what learners understand, what they are unsure about, and what needs another explanation or practice round.

Online assessment improves this process. It is easier to deliver consistently, quicker to collect responses from everyone, and simpler to review at scale. You can track progress across lessons, spot common mistakes, and give timely feedback without piles of paper or manual scoring for every check.

In this guide, I’ll share 10 practical ways to assess student learning online, with examples of when to use each method and how to keep it manageable.

Key Student Assessment Terms and Definitions

Student assessment discussions often get confusing because people use assessment, test, and exam interchangeably. Clarifying the terms helps you choose the right method and set the right expectations with learners.

- An assessment is any method used to gather evidence of learning. This can include quizzes, projects, presentations, reflections, discussions, peer reviews, and more.

- A test is usually a structured set of questions designed to evaluate knowledge or skill. Many tests are graded, but not all have to be.

- An exam is often a higher-stakes evaluation used at the end of a unit or course. Exams tend to be more formal and carry more weight in final scores.

In online learning, you do not need to “test more.” You need clearer evidence of learning. That evidence should help you answer questions like:

- Do learners have enough background knowledge to start this topic?

- Are they following the lesson right now, or pretending to keep up?

- Can they apply what they learned in realistic situations?

- What feedback will help them improve next time?

These questions guide the assessment methods you choose.

The Best Times to Assess Student Learning

Assessment is not only about the format you choose. Timing matters just as much. In online learning, timing becomes critical because learners can struggle quietly without being noticed.

A balanced approach usually includes three moments.

Diagnostic assessment happens before instruction. It helps you understand readiness and prior knowledge. It prevents you from teaching content that is too advanced or too basic.

Formative assessment happens during instruction. It helps you monitor understanding as learners practice, make mistakes, and improve. It also tells you when to slow down, reteach, or move forward.

Summative assessment happens after instruction. It evaluates mastery at the end of a unit, module, or course. It is useful for grading and outcomes, but it should not be your only checkpoint.

A practical way to remember this is simple: assess early, assess often, assess smart. The methods below help you do exactly that.

10 Online Assessment Methods to Measure Learning

Not every assessment method measures the same thing. Some are great for quick checks. Some reveal deeper reasoning. Some show skill performance and application. The best courses mix online assessment methods based on learning goals and practical constraints.

1. Online Quizzes

Online quizzes are structured, question-based assessments delivered digitally. Objective question types can be graded automatically, and short-answer questions can be added for you to review later.

Quizzes are versatile because they work at every stage of learning:

- A pre-quiz shows readiness before a unit

- A mid-lesson quiz checks understanding during instruction

- A post-quiz confirms mastery after instruction

- A practice quiz supports exam preparation and retention

Example in a Classroom Setting:

You are starting a unit on fractions. Before teaching, you give a short pre-quiz with five questions: identify equivalent fractions, compare two fractions, and a simple word problem. The results show that most students understand equivalence but struggle with comparing fractions.

You adjust the first lesson to focus on number lines and visual comparisons instead of spending time reteaching equivalence.

Example in Workplace Training:

You are training customer support agents on a new refund policy. A short quiz after the session checks whether they can identify edge cases and exceptions. You notice many agents missed questions about partial refunds. You add a follow-up micro-lesson with real examples, then run a second quiz two days later.

Actionable Tips for Better Quizzes:

- Mix recall and application. A few “what is” questions are fine, but add “what should you do next?” questions to reveal understanding.

- Use question pools when possible so learners get different versions.

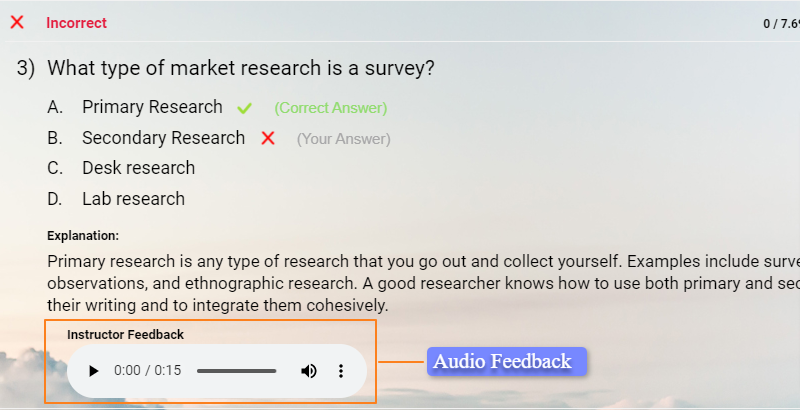

- Add brief feedback to key questions. Even one sentence helps learners learn from mistakes.

- Keep formative quizzes low-stakes. Learners tend to perform better and answer more honestly when a quiz feels like practice.

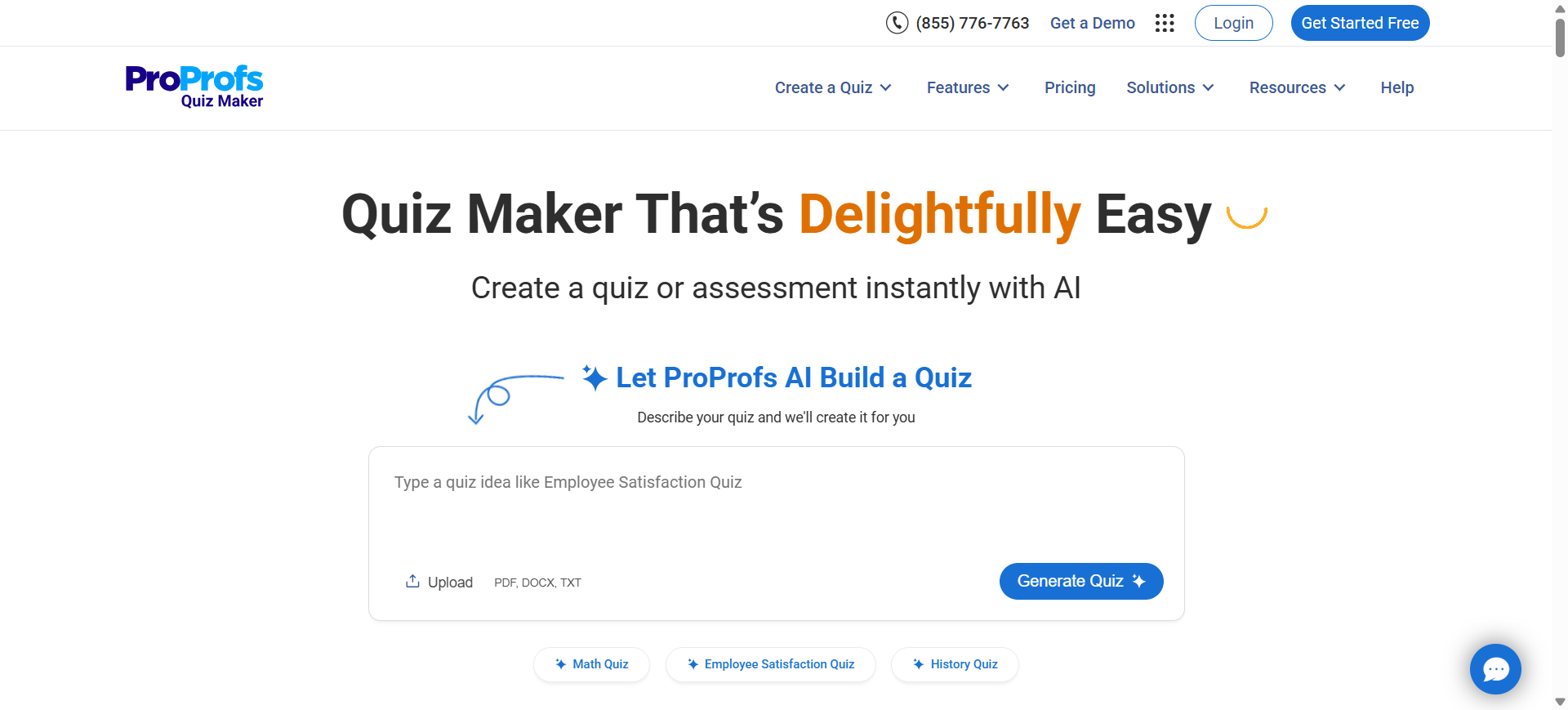

- Create quizzes with AI to save time and help cover the topic more completely.

Quizzes become especially powerful when you use them repeatedly over time. Practice testing supports retention, and frequent low-stakes quizzes can reduce test anxiety.

2. Online Polls

Online polls are fast questions that help you check understanding in the moment. They can run during live sessions or be shared asynchronously as short prompts.

Polls are best for online formative assessment because they are low effort, quick to answer, and easy to interpret. They also let every learner respond, not just the most vocal ones.

Example During a Live Lesson:

You are teaching the difference between correlation and causation. Midway, you run a poll: “Which statement shows causation?” with four options. Half the class chooses the correlation example. Instead of moving forward, you pause and show a simple decision rule and a few more examples.

Example for Asynchronous Learning:

Learners complete a video lesson. At the end, you post a poll: “Which step is most confusing?” with options tied to the lesson. The responses tell you what to address in the next discussion thread or follow-up video.

Poll Prompts That Work Well:

- Confidence rating: “How confident are you with this topic?” (1–5)

- Best-example prompt: “Which scenario best shows this concept?”

- Sticking point check: “Which step felt hardest?”

- Quick takeaway: “Pick the best summary of today’s idea.”

The key with polls is to treat them as data, not decoration. When a poll reveals confusion, it should trigger action: reteaching, another example, or a slower pace.
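As a rough illustration of treating poll results as data, the sketch below turns a set of responses into a suggested next step. The function name `poll_action` and the 70% cutoff are assumptions made for this example, not a recommended standard; choose a threshold that fits your own class.

```python
from collections import Counter


def poll_action(responses, correct_option, move_on_at=0.7):
    """Suggest a next step based on the share of correct poll responses.

    move_on_at is a hypothetical cutoff chosen for illustration only.
    """
    if not responses:
        return "no responses", None
    counts = Counter(responses)
    share_correct = counts[correct_option] / len(responses)
    # Find the most popular wrong option so the reteach can target it.
    wrong = {opt: n for opt, n in counts.items() if opt != correct_option}
    top_wrong = max(wrong, key=wrong.get) if wrong else None
    action = "move on" if share_correct >= move_on_at else "reteach"
    return action, top_wrong


# Half the class picks the correlation example ("A") instead of causation ("C").
responses = ["A"] * 10 + ["C"] * 10
print(poll_action(responses, correct_option="C"))  # ('reteach', 'A')
```

Returning the most common wrong option alongside the action tells you which misconception to address first when you pause to reteach.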

3. Exit Tickets and Entrance Tickets

Image Source: bookwidgets.com

Entrance tickets check what learners remember before instruction begins. Exit tickets confirm understanding after instruction ends. In online learning, tickets can be done through a short form, an LMS prompt, a quiz link, or a structured chat response.

Tickets are small but extremely effective because they create a routine of learning checks. They also reduce the risk of learners drifting through sessions without engaging.

Example Exit Ticket for a Lesson:

At the end of a session on persuasive writing, students answer:

- “Write one strong claim for the topic we discussed.”

- “What part of writing a claim still feels confusing?”

The first response shows skill performance. The second shows what to teach next.

Example Entrance Ticket for a Training Program:

Before a session on cybersecurity, employees answer three questions: identify a phishing email, define multi-factor authentication, and answer a quick true/false question on password reuse. The responses help the instructor avoid repeating what everyone already knows and focus on real gaps.

What Makes Tickets Work:

- Keep them short enough to finish in two minutes.

- Tie them to the lesson goal, not random trivia.

- Use one reflection prompt to uncover confusion that scores cannot show.

Exit tickets are also an easy way to build learner accountability without making the learning experience feel punitive.

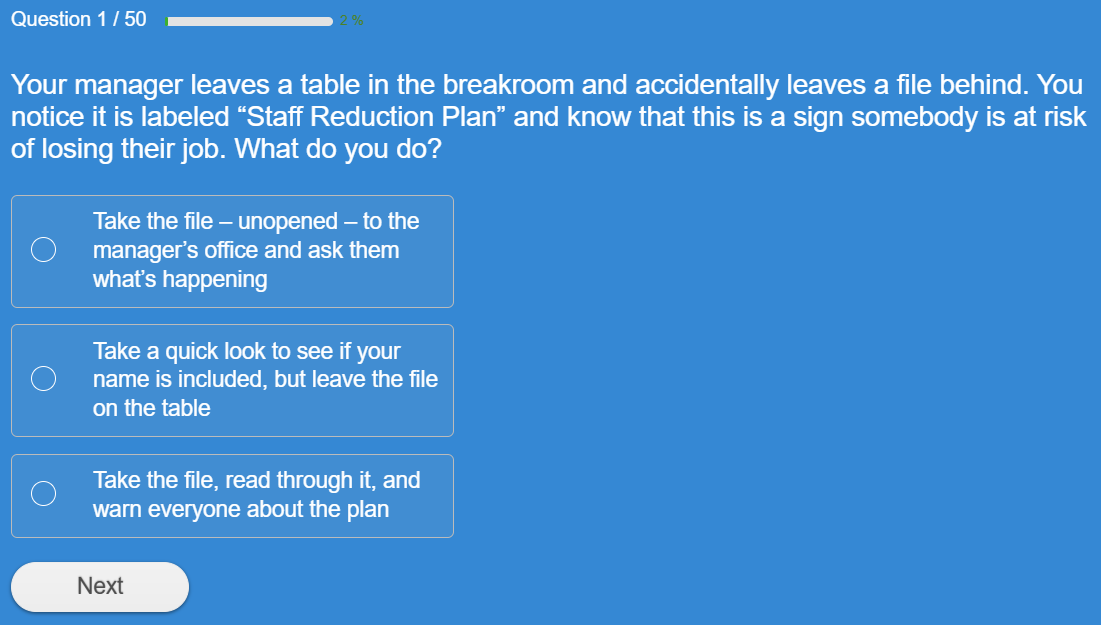

4. Scenario-Based Questions and Case Studies

Scenario-based assessment asks learners to apply knowledge in realistic situations. Instead of checking memorization, it checks whether learners can use the concept in context.

Scenarios are ideal for topics where decision-making matters. They are also one of the best ways to measure real learning beyond multiple-choice recall.

Example for Customer Service Training:

A learner is shown a scenario: “A customer says their package arrived damaged, but they want a replacement shipped overnight for free.” The question asks what to do next based on policy and tone guidelines. Learners choose an option and explain why.

Example for Healthcare Education:

A scenario describes symptoms and patient history. Students choose the most appropriate next step and justify the decision. This reveals whether they can apply knowledge rather than memorize facts.

Example for K–12:

Instead of asking “What is the main idea?” you provide a short story and ask, “Which sentence best supports the main idea, and why?” This turns a basic concept into application.

Scenario-based questions can be delivered in multiple ways:

- Multiple choice: “What should happen next?”

- Short answer: “Explain the decision in 3–5 sentences.”

- Branching scenarios: choices lead to different outcomes

To keep grading manageable, use a small rubric for explanations. Focus on accuracy and reasoning. You do not need a complex scoring system to get clear evidence.

5. Open-Ended Questions and Essays

Open-ended questions ask learners to explain, defend, compare, or reflect. Essays are a longer form of the same idea. This method reveals how learners think, not just what they remember.

Open-ended assessment is useful when you want to measure:

- Depth of understanding

- Ability to reason and use evidence

- Clarity of communication

- Critical thinking

Example Open-Ended Prompt:

“Explain the concept of opportunity cost using a real decision you made recently.”

This makes copying harder and reveals whether learners truly understand the idea.

Example Compare-and-Justify Prompt:

“Compare two approaches and recommend one for this scenario. Use two reasons.”

To avoid grading overload, use short responses most of the time and reserve full essays for major milestones. A single paragraph can reveal a lot if the prompt is focused.

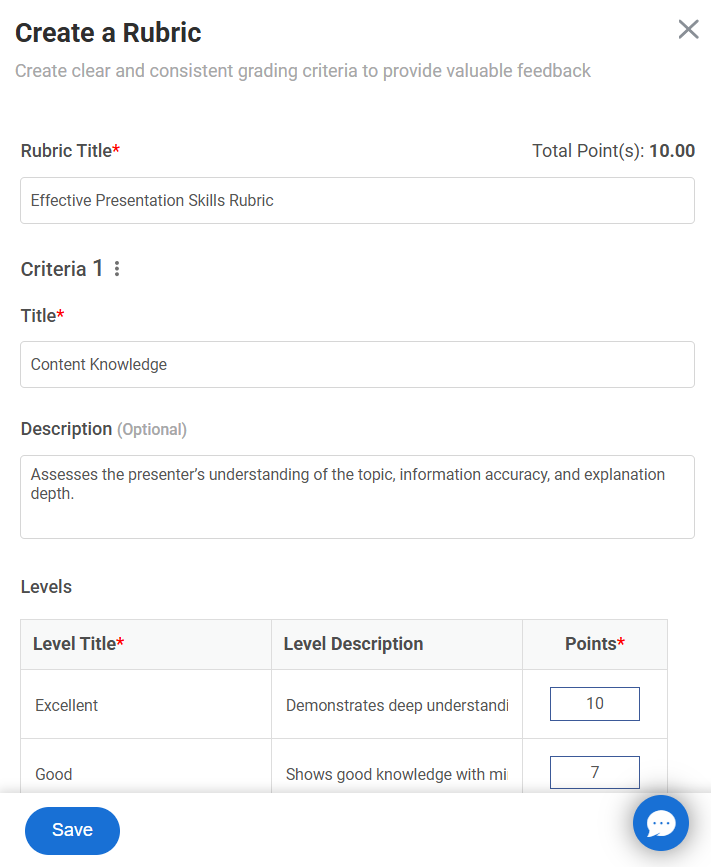

Rubrics are essential here. A simple rubric often includes:

- Accuracy

- Reasoning

- Evidence or examples

- Clarity
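One way to turn those four criteria into a score is sketched below. The 0 to 3 scale per criterion, the equal weighting, and the function name `score_response` are assumptions for illustration; adjust them to match your own rubric.

```python
# Criteria taken from the list above; the 0-3 scale is an assumption.
CRITERIA = ("accuracy", "reasoning", "evidence", "clarity")
MAX_POINTS = 3


def score_response(ratings):
    """Return the rubric score as a percentage of the maximum possible points."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"Missing ratings for: {', '.join(missing)}")
    for criterion in CRITERIA:
        if not 0 <= ratings[criterion] <= MAX_POINTS:
            raise ValueError(f"{criterion} must be between 0 and {MAX_POINTS}")
    total = sum(ratings[c] for c in CRITERIA)
    return round(100 * total / (MAX_POINTS * len(CRITERIA)), 1)


# Hypothetical ratings for one short response: 10 of 12 possible points.
example = {"accuracy": 3, "reasoning": 2, "evidence": 2, "clarity": 3}
print(score_response(example))  # 83.3
```

Keeping every criterion on the same small scale makes scores easy to explain to learners and quick to assign.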

6. Student-Created Videos

Student-created videos ask learners to explain a concept, teach it back, or demonstrate a skill. This format makes thinking visible and adds authenticity to online learning.

Video assessment is especially useful when learners need to show a process, demonstrate a skill, or practice communication.

Example for Language Learning:

Learners record a two-minute video responding to a prompt using target vocabulary and grammar structures.

Example for Science:

Students demonstrate a simple experiment and explain the result and method.

Example for Workplace Training:

Sales reps record a mock call where they handle a pricing objection. This is far more useful than a quiz alone because you hear tone, structure, and clarity.

Strong video prompts are specific:

- A two-minute explanation with one example

- A walkthrough of how a problem was solved

- A role-play response to a realistic scenario

To keep grading fair and learner-friendly, grade content over production quality and say so clearly. A focused rubric that looks at correctness, clarity, reasoning, and relevance usually works well.

7. Live Oral Checks and Online Interviews

Oral checks are live assessments via video call, either one-on-one or in small groups. They help you assess communication, reasoning, and authenticity in a natural way.

This method works well when explanation and speaking skills matter. It is also useful for confirming understanding when results look inconsistent across assessments.

Example:

A student scores high on quizzes but struggles with projects. A short oral check helps you understand whether they truly grasp the content or whether the quiz scores were inflated by guessing or outside help.

Oral checks do not need to be long. Five minutes can provide strong evidence. A simple structure works well:

- One core question that requires explanation

- One follow-up question that changes the conditions slightly

A basic rubric focused on accuracy, clarity, and reasoning keeps evaluation consistent.

8. Peer Evaluation and Discussion-Based Assessment

Peer evaluation asks learners to review each other’s work using clear criteria. Discussion-based assessment evaluates learning through structured contributions in forums, threads, or guided conversations.

These methods are valuable because learners often learn deeply by explaining ideas and giving feedback. They also reduce isolation, which is a common problem in online learning.

Example Peer Review Activity:

Students submit a short essay draft. Each student reviews two peers using a rubric. They give one strength and one specific improvement suggestion. The instructor reviews a sample of feedback to ensure quality.

Example Discussion-Based Assessment Prompt:

“Pick one idea from the lesson and apply it to a real example. Reply to one peer by extending their example or offering a counterpoint.”

Peer review works best with structure. A simple feedback format improves quality:

- One strength

- One question

- One suggestion

For discussions, prompts should require a stance and an example. If prompts are too broad, responses become generic and unhelpful.

9. Self-Assessment and Reflection Journals

Self-assessment asks learners to reflect on what they learned, where they struggled, and what they will do next. It can be done through weekly journals, short reflections after lessons, or periodic checklists.

Reflection adds value because it captures what scores cannot. Learners might do well but feel unsure, or do poorly but explain exactly where confusion began.

Example Reflection Prompts:

- “What concept became clearer this week?”

- “What is one thing you still feel unsure about, and why?”

- “What will you do differently next time?”

- “What strategy helped you learn this week?”

These prompts help you understand each learner's mindset, confidence, and barriers. They also help learners build the habit of learning intentionally.

Keep reflections short and consistent. Two to five sentences is often enough.

10. Game-Based Assessment and Interactive Challenges

Game-based assessment uses mechanics like points, timed rounds, challenges, or team play to increase participation and make practice feel less intimidating.

This method works best for formative checks, review sessions, and repeated practice. It is especially useful when attention is low or when learners need repetition to master core concepts.

Example:

After a lesson, run a timed “find the mistake” challenge where learners identify errors in example solutions. This reveals misconceptions quickly and makes practice more engaging.

A few game formats that translate well online:

- Timed quiz rounds

- “Find the mistake” challenges

- Breakout-room competitions

- Mini scenario missions where correct answers unlock the next step

Always add a short debrief. Ask what was confusing and why. That reflection turns a fun activity into usable learning data.

Best Practices for Running Online Assessments With Less Stress

Online assessment becomes much easier when a few core habits are in place. The best practices below help you gather clearer evidence, reduce unnecessary grading load, and keep learners engaged.

1. Start With One Clear Assessment Goal

Before building anything, decide what you want the assessment to reveal. Readiness, understanding, application, or mastery all require different formats.

For example, if you want application, scenario prompts reveal more than recall quizzes. If you want readiness, a diagnostic quiz is more useful than an essay.

2. Keep Checks Small and Frequent

Frequent, low-stakes checks reduce the risk of learners falling behind quietly. They also reduce pressure, which tends to improve participation and honesty.

Small checks can be as simple as a poll, a three-question quiz, or a two-minute exit ticket. These methods provide more actionable insight than one large assessment at the end.

3. Use Rubrics for Anything Subjective

Rubrics reduce guesswork. They also make grading faster and feedback more consistent.

Even a simple rubric with three to four criteria can improve consistency across essays, videos, projects, and peer evaluation. Learners also perform better when expectations are clear.

4. Mix Formats to Get a More Accurate Picture

One format rarely captures learning fully. Some learners perform poorly on timed quizzes but excel in projects or oral explanations. Others can write well but struggle to apply concepts.

A mix of methods improves accuracy and fairness while keeping learning more engaging.

5. Design Questions to Reveal Reasoning

Online environments make guessing easy. A few design upgrades reveal thinking without adding heavy grading.

Simple ways to do this:

- Ask learners to choose an option and write one sentence explaining why

- Use “best answer” prompts where judgment matters

- Add scenario context instead of asking definition-only questions

6. Use Integrity-Friendly Design Instead of Over-Policing

Security matters, but online assessment does not need to feel like surveillance. Design choices reduce cheating while keeping the experience learner-friendly.

Helpful approaches include question pools, randomized question order, shuffled options, and reasonable time limits. Application prompts and short explanations also make copying harder.
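For readers who build their own quizzes, the sketch below shows how a question pool, randomized question order, and shuffled options can fit together. The pool contents, the `build_quiz` function, and the idea of seeding by learner ID are all assumptions for illustration; most quiz platforms offer these settings without any code.

```python
import random

# Hypothetical question pool: prompt, answer options, and the correct answer.
POOL = [
    {"prompt": "Which fraction is equivalent to 1/2?",
     "options": ["2/4", "1/3", "3/5"], "answer": "2/4"},
    {"prompt": "Which fraction is larger?",
     "options": ["2/3", "3/5"], "answer": "2/3"},
    {"prompt": "Which fraction is equivalent to 3/4?",
     "options": ["6/8", "4/6", "9/16"], "answer": "6/8"},
    {"prompt": "Which fraction is smaller?",
     "options": ["1/4", "1/5"], "answer": "1/5"},
]


def build_quiz(pool, num_questions, seed=None):
    """Draw questions from the pool in random order and shuffle each one's options."""
    rng = random.Random(seed)  # a fixed seed reproduces the same version
    chosen = rng.sample(pool, num_questions)  # no repeats, random order
    quiz = []
    for question in chosen:
        options = list(question["options"])  # copy so the pool is not modified
        rng.shuffle(options)
        quiz.append({"prompt": question["prompt"],
                     "options": options,
                     "answer": question["answer"]})
    return quiz


# Seeding with a learner ID gives each learner a stable, individual version.
for item in build_quiz(POOL, num_questions=3, seed="learner-42"):
    print(item["prompt"], item["options"])
```

Seeding by learner means a learner who reloads the quiz sees the same version, while classmates are likely to get a different selection and order.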

7. Track Progress Over Time, Not One Score

Online assessment becomes more meaningful when you measure growth. A single score can mislead. Progress trends are more useful.

Practical ways to track progress:

- Compare pre-quiz and post-quiz performance

- Track recurring wrong answers across weeks using quiz reports and statistics

- Use weekly reflections to monitor confidence and clarity
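If you export results from your quiz tool, the first two checks above can be sketched in a few lines. The learner names, scores, question IDs, and function names here are made up for illustration, and the data shapes are assumptions rather than any particular tool's export format.

```python
from collections import Counter

# Hypothetical percentage scores keyed by learner.
pre_scores = {"ana": 40, "ben": 60, "chi": 80}
post_scores = {"ana": 70, "ben": 60, "chi": 90}


def score_gains(pre, post):
    """Return the post-quiz minus pre-quiz gain for learners who took both."""
    return {name: post[name] - pre[name] for name in pre if name in post}


# Hypothetical weekly logs of question IDs that were answered incorrectly.
weekly_misses = [
    ["q2", "q5", "q5", "q7"],
    ["q5", "q7", "q9"],
    ["q5", "q1"],
]


def recurring_misses(weeks, min_weeks=2):
    """Return question IDs missed in at least min_weeks different weeks."""
    weeks_missed = Counter()
    for week in weeks:
        weeks_missed.update(set(week))  # count each question once per week
    return sorted(q for q, n in weeks_missed.items() if n >= min_weeks)


print(score_gains(pre_scores, post_scores))  # {'ana': 30, 'ben': 0, 'chi': 10}
print(recurring_misses(weekly_misses))       # ['q5', 'q7']
```

A flat gain, like the zero for "ben" in this made-up data, and questions that keep reappearing in the misses are both signals worth a follow-up, even when average scores look fine.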

8. Give Feedback Learners Can Use Immediately

Scores alone rarely improve learning. Feedback does, especially when it is timely and specific.

A simple feedback pattern scales well:

- One thing done well

- One improvement area

- One next step

Even short feedback builds momentum, helps learners correct mistakes faster, and improves outcomes over time.

Assess Student Learning Online With Confidence

Online assessment works best when it provides clear evidence, supports learning, and fits into real teaching routines. Start small, assess frequently, and use results to guide what happens next. When assessment becomes a steady feedback loop, learning improves, and teaching becomes easier.

If you want a practical way to bring several of these methods together, ProProfs Quiz Maker can help you create quizzes, build question pools, run practice tests, and generate reports without extra complexity. The goal is not to test more. It is to understand learning better, earlier, and more consistently.

Frequently Asked Questions

How often should you assess student learning in an online class?

Assess in small ways after most lessons, so confusion does not build up silently. A quick poll, mini quiz, or exit ticket is usually enough. Save bigger assessments for the end of a unit or module, once learners have had time to practice.

How do you assess student learning without adding more grading work?

Rely on auto-graded checks for routine tracking, then add a few high-value tasks for depth. For example, assign one short scenario response per unit instead of frequent long assignments. A simple rubric keeps manual grading fast, consistent, and less exhausting.

How can you reduce cheating in online assessments?

Reduce the temptation to copy by designing questions that require thinking. Randomization and question pools help, but application prompts help more. Ask learners to explain a choice, show steps, or respond to a scenario. It becomes harder to cheat and easier to spot gaps.

What’s the difference between diagnostic, formative, and summative assessment?

Diagnostic assessment happens before instruction to check starting knowledge and gaps. Formative assessment happens during learning to guide feedback and adjust teaching. Summative assessment happens at the end to confirm mastery. Using all three gives you a clearer picture than relying on finals alone.
