Designing assessments at scale is a balancing act. You need to confirm real understanding, not just recall, and you need to do it across hundreds or even thousands of learners without slowing down operations.

From experience, I’ve found that the smartest assessment strategies don’t depend on just two or three quiz question types. They use multiple formats with intent. Essays bring depth, multiple-choice questions bring efficiency, and interactive formats drive engagement.

But the real strength lies in knowing when to use each. Choosing the right mix can make your quiz more interactive and far more insightful. And trust me, once you understand how each type works, creating impactful quizzes becomes a whole lot easier.

In this post, I’ll guide you through selecting the best question types for each objective, so your assessments deliver not just scores but insights you can act on at scale.

Choosing the Right Quiz Question Types: Matching Format to Purpose

When it comes to designing assessments that actually measure learning, not just recall, your choice of quiz question types becomes the core strategy. Each format tests a different layer of cognition — from simple fact recognition to deep analytical reasoning — and the best question types are those that align directly with your learning objective.

In practice, I’ve found that the best way to design assessments is to divide question types into two broad categories: those optimized for speed and scalability (like multiple-choice) and those that assess depth and synthesis (like essays or case-based responses). It’s not about multiple choice vs subjective — it’s about using both strategically.

Auto-graded quizzes provide efficiency and instant feedback, while manually reviewed questions reveal insight that algorithms can’t. Blending them intelligently gives you the best of both: data you can trust and speed you can scale.

Popular Quiz Question Types

In this section, I’ll walk you through the different types of quiz questions—from simple MCQs to interactive image-based choices. I’ll also highlight the key benefits of each type and share practical design tips to help you use them more effectively.

High-Efficiency Quiz Question Types for Scale and Recall

These quiz question types form the backbone of large-scale testing programs. They’re quick to grade, easy to automate, and consistent across thousands of learners. I use them when the goal is to validate recall, comprehension, and procedural accuracy — efficiently and at scale.

1. Multiple-Choice Questions (MCQs)

MCQ tests are the cornerstone of scalable assessments. They let you test broad content efficiently while still allowing for complexity when designed thoughtfully. I often use them when I need to measure both recall and applied understanding at scale.

When to Use:

- When you need a balance of breadth and depth, covering many learning objectives in a single quiz.

- To test understanding of principles through scenario-based stems or problem-solving situations.

- Ideal for compliance training, product knowledge, or certification exams where objectivity and speed are critical.

Key Benefit:

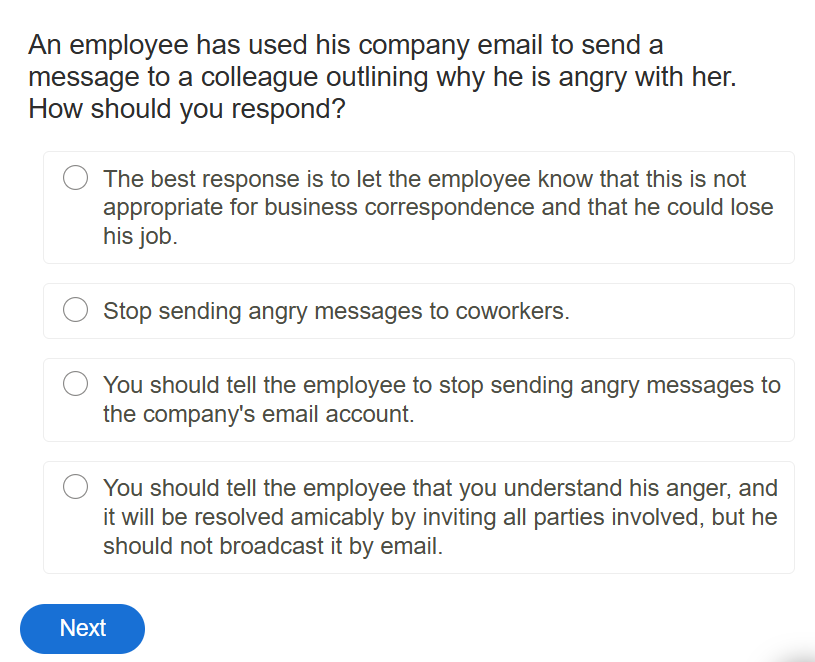

MCQs provide instant, reliable grading while supporting question variety — from simple recall to higher-order reasoning, depending on how you frame the stem.

Design Tip:

Make questions situational. For example, describe a real-world challenge and ask learners to choose the most effective response. This transforms recall into application.

Limitation:

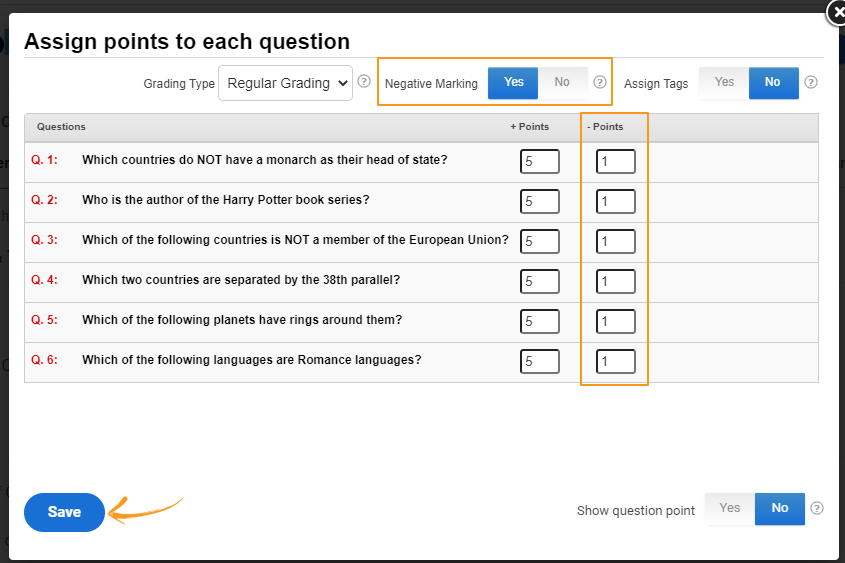

Poorly written distractors can make the right answer too obvious, reducing validity. For high-stakes tests, consider negative marking to discourage guessing.
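To see why negative marking discourages guessing, it helps to run the numbers. Here is a minimal sketch that computes the average score of a blind guess on a single-answer MCQ. The helper name `expected_guess_score` is my own, not a feature of any quiz tool, and it assumes exactly one correct option and an equal chance of picking each option.

```python
from fractions import Fraction

def expected_guess_score(num_options, reward=1, penalty=0):
    """Average points from a blind guess on a single-answer MCQ.

    Assumes exactly one correct option and a uniformly random pick.
    """
    p_correct = Fraction(1, num_options)
    return p_correct * reward - (1 - p_correct) * penalty

# No penalty: a blind guess on a 4-option question earns 1/4 point on average.
print(expected_guess_score(4))                          # 1/4
# Penalty of 1/(k-1) = 1/3 per wrong answer: guessing gains nothing on average.
print(expected_guess_score(4, penalty=Fraction(1, 3)))  # 0
```

With k options, a penalty of 1/(k-1) per wrong answer brings the expected value of a blind guess to zero. Whether that penalty is right for your learners is a judgment call, since it can also discourage educated guesses.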

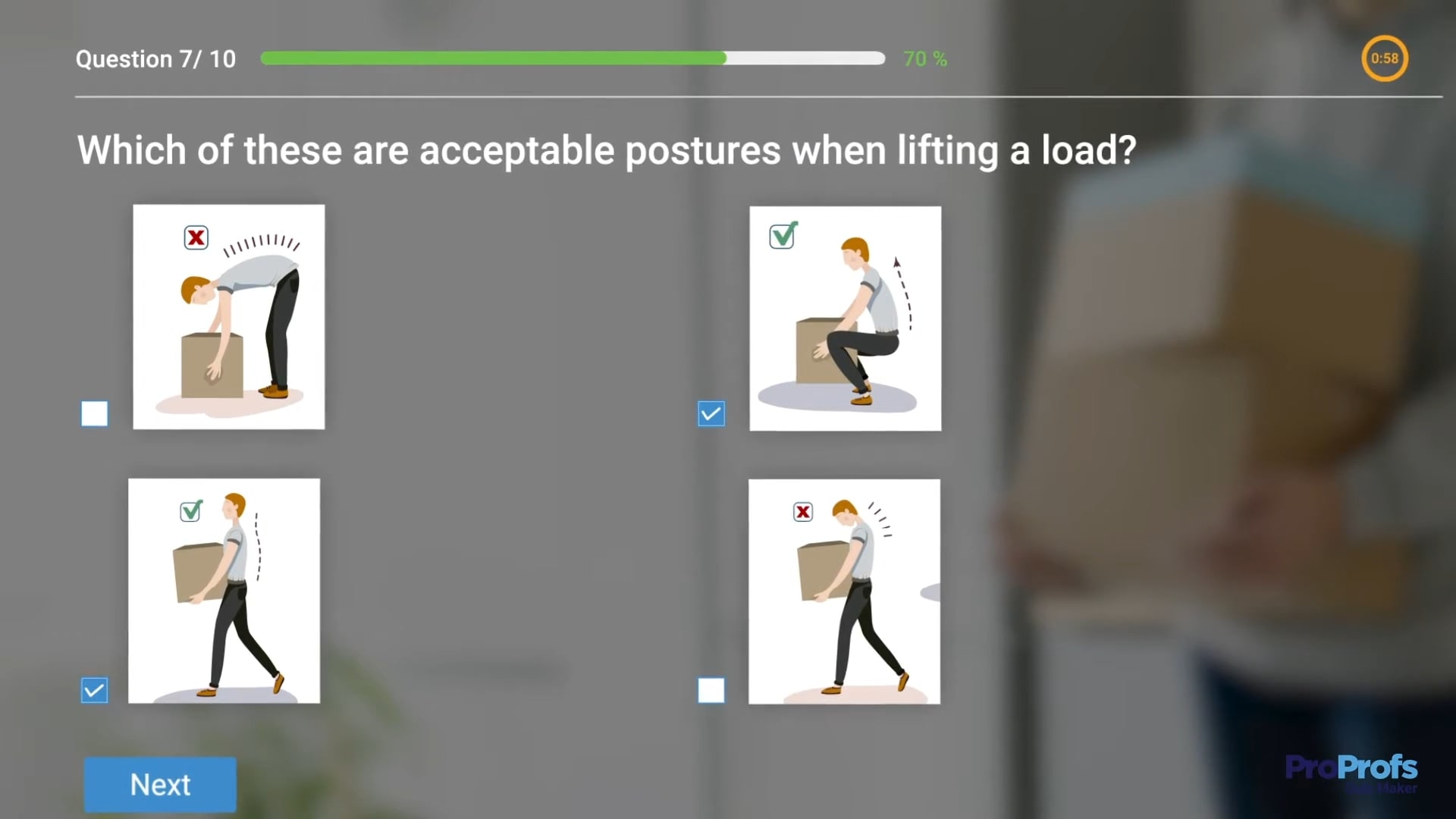

2. Checkbox (Multiple-Response)

Checkbox questions demand that learners identify all correct answers — not just one. This makes them inherently more rigorous than standard MCQs and excellent for assessing layered understanding.

When to Use:

- When knowledge involves multiple correct elements — for example, safety & compliance protocols, troubleshooting steps, or multi-criteria decisions.

- To assess comprehension of detailed processes or frameworks that rely on combinations of factors.

- Effective for intermediate-level learners who are building toward mastery.

Key Benefit:

Checkbox questions promote analytical thinking because learners must assess every option independently rather than guessing one answer.

Design Tip:

Enable partial grading to recognize partial mastery. This provides more diagnostic insight into how close a learner is to full understanding.

Limitation:

Ambiguity in the options can create confusion. Make sure each option is unambiguously correct or incorrect, and that “select all that apply” is clearly stated in the question.

3. True/False or Yes/No

True/False questions are quick and efficient for verifying basic facts or definitions. While not suitable for deep analysis, they serve as a useful warm-up or reinforcement tool in larger assessments.

When to Use:

- For low-stakes checks, such as validating foundational knowledge or quick knowledge refreshers.

- To introduce or conclude a module with simple binary decisions.

- In mobile learning or microlearning where brevity matters.

Key Benefit:

They’re fast to author, instantly graded, and ideal for maintaining engagement during frequent short quizzes.

Design Tip:

Keep statements unambiguous and free of qualifiers like “always” or “never,” which can bias the response toward one side.

Limitation:

The 50% guess rate makes this format unreliable for critical evaluation. Avoid using it for complex concepts or summative assessments.
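To put the guess rate in perspective, here is a rough sketch that estimates how often a learner could pass by guessing alone. It uses the standard binomial formula; the function name, the 10-question quiz, and the 70% pass mark are illustrative assumptions.

```python
from math import comb

def prob_pass_by_guessing(num_questions, pass_mark, p_correct=0.5):
    """Chance of getting at least `pass_mark` items right by guessing every item."""
    return sum(
        comb(num_questions, k) * p_correct**k * (1 - p_correct) ** (num_questions - k)
        for k in range(pass_mark, num_questions + 1)
    )

# 10 True/False items, pass mark of 7 correct
print(round(prob_pass_by_guessing(10, 7), 3))        # 0.172
# Same quiz built from 4-option MCQs instead
print(round(prob_pass_by_guessing(10, 7, 0.25), 4))  # 0.0035
```

Under these assumptions, roughly one in six pure guessers would pass the True/False version, compared with well under 1% on the four-option version.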

4. Dropdown

Dropdown questions work like multiple-choice questions but display answer options in a compact list. They’re particularly effective when designing long or mobile-optimized quizzes where space and visual simplicity matter.

When to Use:

- In online training modules or mobile-friendly quizzes, where screen space is limited.

- When embedding quick checks inside reading passages, forms, or learning content.

- For factual or recall-based questions that don’t need long explanations or visuals.

Key Benefit:

Dropdowns make quizzes more streamlined and user-friendly, improving the flow of longer assessments without compromising scoring accuracy.

Design Tip:

Keep answer options short and clearly distinct. Avoid long or similar-looking options that force unnecessary scrolling on smaller screens.

Limitation:

Because options remain hidden until expanded, learners may miss context clues. Avoid using dropdowns for complex or multi-step questions that require comparing all choices.

5. Fill-in-the-Blanks

This format embeds one or more blanks within a sentence or paragraph, prompting learners to recall key terms, values, or concepts. It tests contextual understanding and attention to detail simultaneously.

When to Use:

- When assessing how well learners can recall terminology within a real-world context.

- For language learning, compliance content, or technical subjects where exact phrasing matters.

- In formative assessments that reinforce critical vocabulary or structured knowledge.

Key Benefit:

It tests recall in context, requiring learners to think about meaning and structure rather than isolated facts.

Design Tip:

Limit blanks to the most essential terms. Provide clear instructions on whether answers are case-sensitive and include acceptable variations (e.g., synonyms, abbreviations, or plural forms).

Limitation:

Too many blanks can turn a comprehension test into a memory exercise. Keep each question focused on 3–5 blanks for clarity and fairness.

6. Type-in (Short Text Entry)

This question type requires learners to type a brief response — a word or a short phrase — with or without contextual cues. It’s one of the purest ways to measure recall and comprehension.

When to Use:

- For subjects where learners must recall precise terminology, such as product names, formulas, or definitions.

- In professional training where terminology accuracy is crucial (e.g., medical, legal, or technical fields).

- When you want to discourage guessing by removing options entirely.

Key Benefit:

Encourages learners to retrieve knowledge from memory instead of recognizing it from a list, which leads to better retention.

Design Tip:

Account for multiple correct versions — such as spelling variants or abbreviations — by adding acceptable alternatives to your grading logic.

Limitation:

Strict auto-grading can penalize minor typos or valid variations. Always balance accuracy with fairness by defining accepted input patterns.
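If you are building or configuring your own grading logic, one way to define accepted input patterns is to normalize the response before comparing it against a list of accepted answers. This is a minimal sketch; `normalize`, `grade_type_in`, and the sample answers are hypothetical, and it only handles case and spacing, not typos.

```python
import re

def normalize(text):
    """Lowercase, trim, and collapse repeated whitespace."""
    return re.sub(r"\s+", " ", text.strip().lower())

def grade_type_in(response, accepted_answers):
    """Return True if the response matches any accepted answer after normalizing."""
    accepted = {normalize(answer) for answer in accepted_answers}
    return normalize(response) in accepted

# Accepted variants: the full term plus its abbreviation (illustrative values)
accepted = ["personal protective equipment", "PPE"]
print(grade_type_in("  Personal  Protective Equipment ", accepted))  # True
print(grade_type_in("ppe", accepted))                                 # True
print(grade_type_in("protective gear", accepted))                     # False
```

Tolerating spelling mistakes would need fuzzy matching on top of this, which is a separate design decision in fields where exact terminology matters.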

7. Numeric Response

Numeric response questions require learners to enter a number as their answer — such as a value, calculation result, or measurement. This format is ideal for quantitative reasoning, where accuracy and understanding of formulas matter.

When to Use:

- For math, science, engineering, or finance topics involving calculations or numeric reasoning.

- When evaluating a learner’s ability to apply formulas or convert between units.

- In technical assessments, where precision and accuracy reflect true competence.

Key Benefit:

Numeric questions measure both conceptual and procedural understanding. They test whether a learner can not only recall a formula but also execute it correctly.

Design Tip:

Allow small margins of error or define accepted ranges (e.g., 12.4–12.6). Include unit expectations (“Enter in meters”) to avoid confusion.

Limitation:

Strict auto-grading can penalize rounding differences or format errors. Define acceptable answer variations and rounding rules clearly.
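A tolerance rule like this can be sketched in a few lines. The example below uses Python’s `math.isclose` with an absolute tolerance; the function name `grade_numeric` and the default tolerance of 0.1 are assumptions for illustration, not settings from any specific quiz platform.

```python
import math

def grade_numeric(response, expected, abs_tol=0.1):
    """Accept a typed number that falls within `abs_tol` of the expected value."""
    try:
        value = float(response.strip())
    except ValueError:
        return False  # the learner typed something that is not a number
    return math.isclose(value, expected, rel_tol=0.0, abs_tol=abs_tol)

print(grade_numeric("12.45", 12.5))   # True  (inside the accepted range)
print(grade_numeric("12.8", 12.5))    # False (outside the accepted range)
print(grade_numeric("twelve", 12.5))  # False (not numeric)
```

Values that sit exactly on the edge of the range can behave unexpectedly because of floating-point rounding, so it is worth choosing a tolerance slightly wider than the strict boundary you have in mind.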

8. Matching

Matching questions ask learners to connect related pieces of information — terms to definitions, theories to authors, or problems to solutions. They’re perfect for reinforcing relationships and structured understanding.

When to Use:

- When testing conceptual associations, such as cause-effect, function-application, or terminology-definition relationships.

- For subjects where understanding links between ideas is essential, such as history, compliance, or technical terminology.

- In formative quizzes aimed at reinforcing prior learning.

Key Benefit:

Matching questions offer wide content coverage in a compact format and help learners visualize relationships between concepts.

Design Tip:

Include more options in the answer column than the question column to prevent learners from solving by elimination. Shuffle both lists to maintain difficulty.

Limitation:

Too many pairs can overwhelm learners and reduce focus. Keep the number of matches between five and ten per question for optimal engagement.

Interactive & Visual Quiz Question Types for Applied Knowledge

Some learning goals can’t be measured with static text alone. That’s where interactive and visual question types come in. These formats let learners engage directly with images, media, and real-world scenarios — testing how well they can apply what they know rather than just recall it.

9. Drag the Words (Interactive Fill-in-the-Blanks)

This question type transforms a traditional fill-in-the-blank into a drag-and-drop activity. Learners drag words or phrases from a predefined word bank into the correct blanks within a sentence or passage.

When to Use:

- For vocabulary, terminology, or concept recall exercises.

- In language learning, onboarding, or quick compliance refreshers, where engagement boosts retention.

- When you want to test comprehension without penalizing minor spelling errors or typos.

- For creating a cloze test.

Key Benefit:

Keeps the quiz interactive and mobile-friendly while maintaining the recall challenge of fill-in-the-blanks.

Design Tip:

Include both correct and distractor words in the bank to increase rigor. Limit total options to a manageable number (8–12) to maintain flow and reduce screen clutter.

Limitation:

Word banks that are too small can make answers obvious; overly large ones can overwhelm learners. Striking a balance is key.

10. Drag-and-Drop Matching

This interactive version of the matching format adds visual engagement and learner participation. Instead of selecting from lists, learners physically drag items to their corresponding targets — which aids retention and attention.

When to Use:

- In visual or hands-on training, such as anatomy, geography, machinery components, or software UI labeling.

- For topics where visual association and interaction improve understanding.

- In gamified eLearning or microlearning, where engagement drives completion.

Key Benefit:

Encourages active learning and builds stronger connections between visual and textual information. It’s particularly effective for spatial or conceptual pattern recognition.

Design Tip:

Ensure draggable items are large enough for easy selection and that the interface works across devices. Provide visual feedback (“snap” or highlight) when items are placed correctly.

Limitation:

May not function consistently on all devices or with assistive technology. Depending on your quiz maker platform, you may need to provide a non-interactive fallback option, such as a standard matching format.

11. Sequencing and Ordering

Sequencing questions test learners’ understanding of order, process, or chronology. They assess not just knowledge but also comprehension of flow — making them powerful for testing procedural or conceptual understanding.

When to Use:

- When evaluating mastery of a step-by-step process, workflow, or timeline.

- In compliance, operations, or manufacturing training where the sequence determines accuracy.

- For historical subjects, troubleshooting flows, or software process training, where the learner must understand logical progression.

Key Benefit:

Reveals whether learners understand relationships between steps, not just individual actions. It’s ideal for assessing structured thinking and procedural logic.

Design Tip:

Keep the list concise — five to seven steps is optimal. Use realistic, slightly confusing distractors (e.g., similar steps) to ensure learners truly understand the correct order.

Limitation:

Long or poorly defined sequences can create fatigue or random guessing. Make sure every step is clearly distinct and contextually necessary.

12. Hotspot

Hotspot questions ask learners to identify a specific area on an image or diagram. They’re excellent for testing visual understanding, such as locating a part of a machine or identifying a region on a map.

When to Use:

- For technical, medical, or safety training involving visual identification.

- When spatial understanding is a key learning outcome.

- To test knowledge of physical layouts, product features, or human anatomy.

Key Benefit:

Turns visuals into active learning tools by making recognition measurable.

Design Tip:

Ensure hotspot areas are large enough for accuracy but precise enough to challenge attention to detail.

Limitation:

Click sensitivity varies by device — test thoroughly on mobile and tablet.

13. Image Choice

This question type replaces text answers with image-based options, helping learners make visual associations quickly and intuitively.

When to Use:

- To identify objects, symbols, or visual cues in branding, geography, or anatomy.

- For early-stage learners or visual thinkers who grasp concepts better through imagery.

- When you want to make the quiz more engaging without adding complexity.

Key Benefit:

Enhances engagement and retention through visual reinforcement.

Design Tip:

Use high-quality, evenly styled images so that no option unintentionally stands out.

Limitation:

Too many image options can clutter the interface — limit to 4–6 per question.

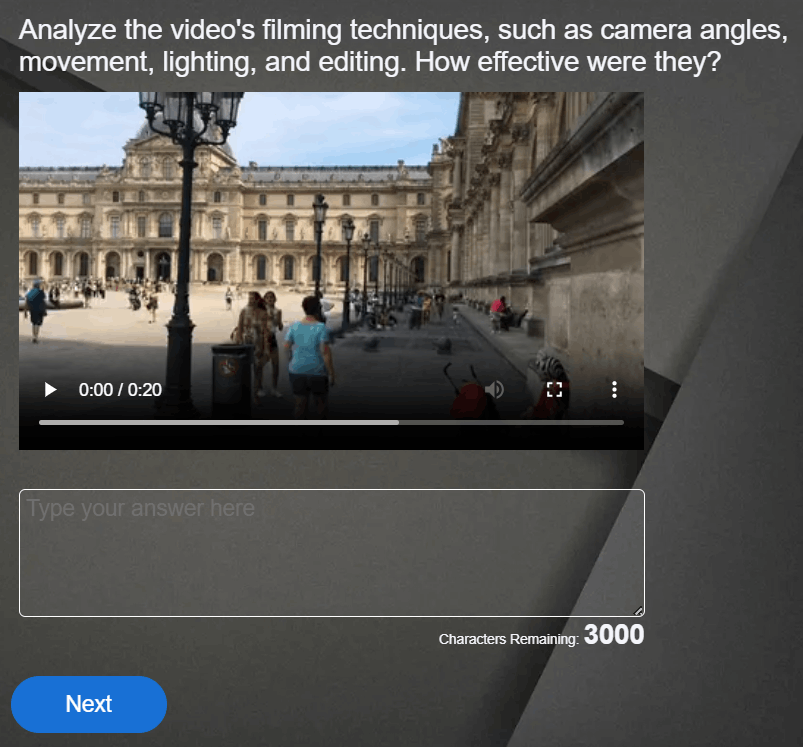

14. Watch/Listen & Answer

This multimedia format embeds short videos or audio clips and asks learners to respond to follow-up questions. It bridges knowledge and application by testing observation, comprehension, and analytical listening.

When to Use:

- In compliance, customer service, or soft-skills training.

- For scenario-based learning, where learners must evaluate context.

- To assess listening comprehension or nonverbal communication skills.

Key Benefit:

Engages multiple senses and promotes deeper cognitive processing.

Design Tip:

Keep clips short and directly tied to the learning objective.

Limitation:

Requires stable playback and accessibility features like captions or transcripts.

Watch: How to Create a Video Quiz

High-Rigor Quiz Question Types for Critical Thinking and Synthesis

Some learning outcomes can’t be auto-graded — and they shouldn’t be. These quiz question types are designed to measure deeper reasoning, problem-solving, and creative thought. Use them when you need to evaluate not just what someone knows, but how they apply it, explain it, or create something new from it.

15. Audio Response (Record/Upload Audio)

Instead of typing, learners record short voice responses to demonstrate communication and comprehension skills.

When to Use:

- In language training, pronunciation, or verbal communication assessments.

- For empathy-based customer support simulations or listening exercises.

- To encourage spoken articulation over written recall.

Key Benefit:

Captures tone, fluency, and confidence — elements text can’t measure.

Design Tip:

Allow re-recording once or twice to ease performance pressure. Provide clear prompts (“Summarize this clip in 30 seconds”).

Limitation:

Manual grading is required, and background noise can impact evaluation quality.

16. Video Response (Record/Upload Video)

Learners respond by recording or uploading a video. Video response questions evaluate presentation, reasoning, and body language — ideal for communication-heavy roles.

When to Use:

- For leadership, interview, or behavioral training modules.

- In performance reviews, where learners explain a decision or strategy.

- To capture how learners present ideas under realistic conditions.

Key Benefit:

Reveals both content knowledge and delivery skills in one format.

Design Tip:

Limit duration to a few minutes and offer recording or upload guidelines for consistency.

Limitation:

Requires manual review and significant bandwidth. Compress uploads, but not so heavily that video quality suffers.

Watch: How to Create a Video Response Question

17. Document or Image Upload

This question type allows learners to upload external files — such as reports, charts, or creative assets — for manual review. It’s ideal for verifying applied learning outcomes.

When to Use:

- For project-based assessments, practical assignments, or certifications.

- In design, engineering, or writing evaluations requiring real work samples.

- To capture work that goes beyond what’s possible within a quiz interface.

Key Benefit:

Encourages learners to produce tangible deliverables that demonstrate applied competence.

Design Tip:

Specify accepted file formats (e.g., PDF, DOCX, JPG) and maximum file size upfront.

Limitation:

Requires human evaluation and storage space. Use this selectively in graded modules.

18. Essay (Long Text Response)

Essay questions ask learners to construct detailed written answers. They’re ideal for evaluating comprehension, reasoning, and the ability to organize thoughts clearly.

When to Use:

- In academic or leadership training, where argumentation and synthesis matter.

- To assess critical thinking, reflection, or problem-solving skills.

- When learners must justify a decision, critique a scenario, or explain a concept in depth.

Key Benefit:

Reveals thought process, depth of understanding, and clarity of reasoning — something no auto-graded question can capture.

Design Tip:

Provide a clear rubric for grading and set length expectations (e.g., “150–200 words”). For large-scale assessments, pair essays with auto-graded sections to balance depth and efficiency.

Limitation:

Manual evaluation is required, and scoring consistency depends on a clear rubric and trained reviewers.

19. Case Study or Scenario Response

This question type presents a real-world scenario and asks learners to analyze it, propose a solution, or explain their decision-making. It mirrors practical problem-solving situations.

When to Use:

- In leadership, management, or customer-facing training.

- For ethics, compliance, or conflict-resolution scenarios.

- Whenever you want to measure reasoning and applied decision-making.

Key Benefit:

Encourages situational thinking — learners apply theory to realistic problems, demonstrating judgment and analysis.

Design Tip:

Keep scenarios concise but rich in context. Use branching questions (e.g., “What would you do next?”) to deepen insight without overwhelming learners.

Limitation:

Requires manual scoring but delivers powerful qualitative data about how learners think.

20. Comprehension

Comprehension questions test the learner’s ability to interpret, analyze, and apply information from a given passage, document, or dataset. Unlike simple recall, they assess how well learners can extract meaning, identify patterns, and draw logical conclusions.

When to Use:

- To evaluate critical reading or analytical reasoning skills.

- For compliance or academic modules where learners must interpret written or technical material.

- In professional training, where reading and interpreting data sheets, memos, or case summaries are common.

Key Benefit:

Goes beyond surface-level understanding — measures whether learners can interpret nuance, context, and intent.

Design Tip:

Keep passages concise (under 300 words) and structure follow-up questions progressively — from fact-based to inference-based. For longer texts, use separate “Read the Doc & Answer” formats to prevent fatigue.

Limitation:

Creating balanced question sets that test both recall and reasoning requires careful curation of source material.

Enhancing the Best Question Types With Diagnostic and Custom Scoring

Well-designed assessments don’t just measure performance — they explain it. Diagnostic and custom scoring help you uncover learning patterns, identify skill gaps, and drive targeted improvement. Instead of treating results as final grades, this approach turns your assessments into data engines that guide action.

Here’s how to design a scoring system that delivers clarity, fairness, and depth.

1. Categorize Questions by Learning Objective

Tag every question to a specific skill or outcome — such as compliance, product knowledge, or problem-solving. This lets you evaluate mastery by topic rather than just overall score.

Action Tip:

Create question categories before authoring your quiz. When reviewing results, analyze performance per category to see where learners excel or struggle.
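If you export raw results and want to run this analysis yourself, a per-category breakdown might look like the sketch below. The tuple layout, the function name `score_by_category`, and the sample data are all hypothetical.

```python
from collections import defaultdict

def score_by_category(results):
    """results: iterable of (category, points_earned, points_possible).

    Returns {category: percent_correct}, rounded to one decimal place.
    """
    earned = defaultdict(float)
    possible = defaultdict(float)
    for category, got, out_of in results:
        earned[category] += got
        possible[category] += out_of
    return {c: round(100 * earned[c] / possible[c], 1) for c in possible}

results = [
    ("compliance", 1, 1),
    ("compliance", 0, 1),
    ("product knowledge", 1, 1),
    ("product knowledge", 1, 1),
    ("problem-solving", 3, 5),
]
print(score_by_category(results))
# {'compliance': 50.0, 'product knowledge': 100.0, 'problem-solving': 60.0}
```

Here the overall score is 6 out of 9, about 67%, which hides the fact that compliance is the real weak spot.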

Case Study:

Christian, a U.S. history teacher from Michigan, organizes his question bank by categories to manage hundreds of items efficiently. This setup lets him randomize quizzes, reuse questions year after year, and track topic-based scores to pinpoint where students need more focus — all without rebuilding his tests from scratch.

Watch: From 15 Years of Paper Tests to Easy Digital Assessments | ProProfs Case Study

2. Apply Weighted Scoring to Reflect Importance

Not every question should carry equal value. Assign a higher weight to scenario-based or decision-making items and a lower weight to recall questions.

Action Tip:

Use a simple structure like:

- 1 point for recall

- 3 points for application

- 5 points for decision-making or scenario analysis

Weighted scoring ensures your assessments measure what truly matters.
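Here is a quick sketch of how the 1/3/5 structure plays out in practice. The question-type labels and the sample answers are made up for illustration.

```python
# Weights follow the 1 / 3 / 5 structure described above.
WEIGHTS = {"recall": 1, "application": 3, "decision": 5}

def weighted_score(answers):
    """answers: list of (question_type, is_correct). Returns (earned, possible)."""
    earned = sum(WEIGHTS[qtype] for qtype, is_correct in answers if is_correct)
    possible = sum(WEIGHTS[qtype] for qtype, _ in answers)
    return earned, possible

answers = [
    ("recall", True), ("recall", True), ("recall", False),
    ("application", True), ("application", False),
    ("decision", True),
]
earned, possible = weighted_score(answers)
print(f"{earned}/{possible} = {earned / possible:.0%}")  # 10/14 = 71%
```

Unweighted, the same learner would score 4 out of 6 (67%). The weighted result is higher because they got the decision-making item right, which is exactly the behavior the weighting is meant to reward.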

3. Use Custom Scoring for Closed-Ended Questions

Closed-ended formats like multiple-choice or dropdowns often have “almost correct” answers that reveal partial understanding. Custom scoring lets you assign point values accordingly, rewarding reasoning and discouraging guesswork.

Example:

Let’s say the question is: “You notice a loose wire sparking near a workstation. What’s the most appropriate action to take?”

- A. Immediately shut off the power and report the hazard to maintenance. → +3 points (best response – prioritizes safety and escalation)

- B. Step away, alert your supervisor, and wait for instructions. → +2 points (partially correct – safe but passive)

- C. Mark the area as unsafe and continue with other tasks. → –1 point (unsafe action – avoids immediate resolution)

- D. None of the above. → 0 points (neutral response – avoids guessing but adds no value)

Action Tip:

Test custom point distributions with a pilot group to ensure they reflect real-world performance.
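For a pilot like this, the loose-wire example can be expressed as a simple lookup table. This is only a sketch: the point values come from the example above, while the function name and the pilot responses are invented.

```python
# Point values per option, taken from the loose-wire example above.
OPTION_POINTS = {"A": 3, "B": 2, "C": -1, "D": 0}

def score_response(choice, option_points=OPTION_POINTS):
    """Return the points for the selected option; skipped or unknown choices score 0."""
    return option_points.get(choice, 0)

# Hypothetical responses from a six-person pilot group
pilot_responses = ["A", "B", "B", "C", "A", "D"]
total = sum(score_response(choice) for choice in pilot_responses)
print(total)                         # 9
print(total / len(pilot_responses))  # 1.5
```

An average of 1.5 out of a possible 3 suggests that most of the pilot group landed on the safe but passive answer, which is the kind of pattern a plain right/wrong score would flatten.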

4. Enable Partial Credit for Multi-Step Thinking

For multi-response or numeric questions, partial scoring ensures progress is recognized. Learners who get most — but not all — of a complex answer correct still earn proportional credit.

Action Tip:

Award points based on the percentage of correct elements (e.g., 4 out of 5 correct = 80%). This encourages persistence and provides richer insight into learning depth.
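One possible way to compute proportional credit for a checkbox question is sketched below. Subtracting wrong selections and flooring the result at zero is my own assumption, added so that ticking every box does not earn full marks; your quiz tool may apply a different rule.

```python
def partial_credit(selected, correct, max_points=1.0):
    """Proportional credit for a multi-response question.

    Each correct pick adds one unit, each wrong pick removes one,
    and the result never drops below zero.
    """
    selected, correct = set(selected), set(correct)
    hits = len(selected & correct)
    false_picks = len(selected - correct)
    return max_points * max(0, hits - false_picks) / len(correct)

correct = {"A", "B", "C", "D", "E"}
print(partial_credit({"A", "B", "C", "D"}, correct))       # 0.8  (4 of 5 correct)
print(partial_credit({"A", "B", "C", "D", "G"}, correct))  # 0.6  (one wrong pick)
print(partial_credit({"F", "G"}, correct))                 # 0.0  (floored at zero)
```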

5. Reward Engagement Through Bonus Points

Motivate learners by rewarding consistent participation and effort. Bonus points can promote completion, speed, or voluntary challenge attempts.

Action Tip:

Offer points for:

- timely completion

- solving advanced or optional challenges

This builds healthy competition and keeps learners engaged through longer training programs.

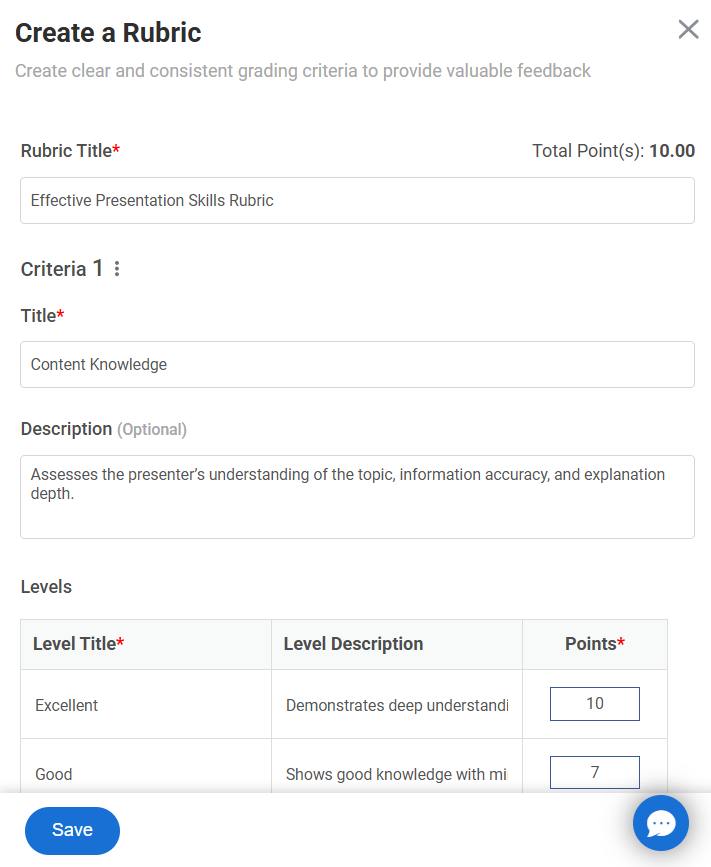

6. Apply Rubrics for Subjective Question Types

For essays, open-ended questions, or video responses, use rubrics to standardize grading. Rubrics outline evaluation criteria (like clarity, accuracy, and critical thinking) and define performance levels with assigned point ranges.

Action Tip:

- Define 3–5 key criteria tied to learning outcomes.

- Set performance levels (e.g., Excellent, Good, Needs Improvement).

- Assign clear point values for each level to ensure fairness and consistency.

Rubrics bring objectivity to subjective tests, ensuring feedback is structured, transparent, and aligned with learning goals.
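A rubric like this translates naturally into a small lookup structure. In the sketch below, the criteria and level names come from the examples above, but the point values (4, 3, 1) are hypothetical and should be replaced with your own.

```python
# Hypothetical point values for each performance level
LEVEL_POINTS = {"Excellent": 4, "Good": 3, "Needs Improvement": 1}
CRITERIA = ["clarity", "accuracy", "critical thinking"]

def rubric_score(ratings):
    """ratings: {criterion: level}. Returns (earned, possible)."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"Unrated criteria: {missing}")
    earned = sum(LEVEL_POINTS[ratings[c]] for c in CRITERIA)
    possible = max(LEVEL_POINTS.values()) * len(CRITERIA)
    return earned, possible

ratings = {
    "clarity": "Excellent",
    "accuracy": "Good",
    "critical thinking": "Needs Improvement",
}
print(rubric_score(ratings))  # (8, 12)
```

Raising an error when a criterion is left unrated is a deliberate choice here: it stops a reviewer from submitting an incomplete evaluation that would quietly lower the score.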

7. Turn Scores Into Actionable Insights

Scores become meaningful only when they drive improvement. Use diagnostic data to personalize feedback or trigger follow-up learning paths.

Action Tip:

Automatically assign microlearning modules or refresher courses to those scoring below a target threshold in specific skill categories.
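The logic behind such a rule is simple enough to sketch. This example assumes you already have per-category percentages for a learner; the module names, the 70% threshold, and the function name are all placeholders.

```python
# Hypothetical mapping from skill category to a refresher module
REFRESHERS = {
    "compliance": "Compliance Refresher",
    "product knowledge": "Product Basics Recap",
}

def assign_refreshers(category_scores, threshold=70):
    """Return refresher modules for categories scoring below the threshold (percent)."""
    return [
        REFRESHERS[category]
        for category, percent in category_scores.items()
        if percent < threshold and category in REFRESHERS
    ]

scores = {"compliance": 50.0, "product knowledge": 100.0, "problem-solving": 60.0}
print(assign_refreshers(scores))  # ['Compliance Refresher']
```

Note that problem-solving also falls below the threshold but has no refresher mapped to it, so nothing is assigned. In a real setup, that gap is worth flagging for whoever maintains the training content.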

8. Track Trends, Not Just Scores

Over time, diagnostic scoring highlights growth patterns and content gaps. Analyze recurring weak areas to determine whether the issue lies with the learner or with the material.

Action Tip:

Compare performance data across cohorts or sessions to pinpoint where training content may need revision.
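As a rough illustration, the sketch below flags categories that fall below a threshold in every cohort, which hints that the material, rather than any one group of learners, may be the issue. The cohort names, scores, and 70% threshold are invented for the example.

```python
from statistics import mean

def weak_categories(cohort_scores, threshold=70):
    """cohort_scores: {cohort: {category: percent}}.

    Returns {category: average_percent} for categories that are
    below the threshold in every cohort.
    """
    shared = set.intersection(*(set(scores) for scores in cohort_scores.values()))
    flagged = {}
    for category in sorted(shared):
        values = [scores[category] for scores in cohort_scores.values()]
        if all(value < threshold for value in values):
            flagged[category] = round(mean(values), 1)
    return flagged

cohorts = {
    "Q1": {"compliance": 62, "product knowledge": 88},
    "Q2": {"compliance": 58, "product knowledge": 71},
    "Q3": {"compliance": 65, "product knowledge": 90},
}
print(weak_categories(cohorts))  # {'compliance': 61.7}
```

A category that dips in only one cohort (like product knowledge in Q2) is not flagged, since that pattern points more toward the group or the session than the content.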

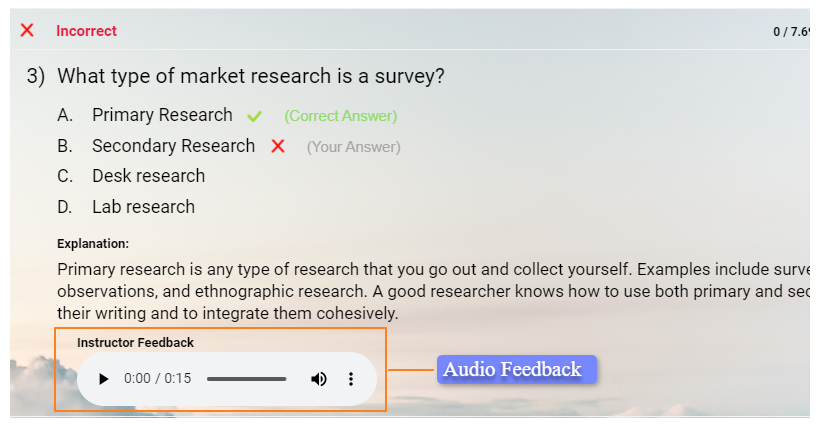

9. Build Feedback That Reinforces Learning

Pair your scoring system with targeted, instructive feedback. Instead of “Correct/Incorrect,” guide learners toward improvement.

Example:

“You identified the issue correctly, but missed the escalation step. Revisit section 2.3 for proper procedure.”

This transforms feedback into a learning loop — not just a score report.

Pick the Right Question Types for Smarter Assessments

Choosing the right quiz question types is about intention, not variety. Each format serves a purpose — some test recall, others assess reasoning or decision-making. When used together, they turn quizzes into meaningful tools that reveal how people learn, not just what they remember.

Pairing diverse question types with diagnostic and custom scoring helps you interpret results with clarity. Instead of static grades, you get patterns — insights that show progress, surface skill gaps, and inform better teaching or training decisions.

With tools like ProProfs Quiz Maker, you can build these systems efficiently. Mix formats, apply flexible scoring, and analyze results at scale to understand performance in context. When your assessments are designed with this level of precision, they don’t just measure learning — they strengthen it.

Frequently Asked Questions

How do I decide between multiple-choice vs subjective questions?

Choose multiple-choice when you need objective, scalable grading. Opt for subjective formats like essays when evaluating reasoning, clarity, or synthesis. A balanced assessment usually combines both — using objective questions for breadth and subjective ones for depth.

How can I make auto-graded questions test higher-level critical thinking, not just basic recall?

Use scenario-based stems (memory plus application) in multiple-choice questions. Place the concept in a realistic context so the learner has to apply principles or rules to solve a problem, which elevates the assessment beyond simple fact retrieval.

What are Level 3 type questions?

Level 3 questions test higher-order thinking, such as analysis, evaluation, and synthesis. The three-level framing is a condensed take on Bloom’s Taxonomy, which itself defines six levels. Instead of recalling facts (Level 1) or explaining concepts (Level 2), learners must apply reasoning, interpret data, or justify conclusions. These questions often take the form of case studies, scenario responses, or essay-style prompts.

What’s the ideal mix of quiz question types in a single assessment?

There’s no universal formula, but a balanced mix often includes 60–70% auto-graded (MCQ, checkbox, fill-in) and 30–40% manual (essay, scenario) formats. The right balance depends on whether your goal is knowledge validation or skill evaluation.
