When I talk to educators, one thing is clear—educational assessment is often misunderstood. Too many people still think it’s just end-of-term tests or standardized exams. In reality, it’s one of the most powerful tools we have to shape learning, not just measure it.

I’ve worked with teachers, schools, and training teams who’ve used assessments to spot trends, personalize learning, and even turn around underperforming programs. The right approach can improve student engagement, make teaching more efficient, and give decision-makers data they can actually use.

In this guide, I’ll unpack what educational assessment really means today, explore the different types, tackle common challenges, and share practical steps for creating assessments that work—whether you’re teaching in a classroom, online, or somewhere in between.

What Is Educational Assessment?

Educational assessment of students is more than a score—it’s the process of collecting and using information to understand how well students are learning and how effectively we’re teaching.

It can be as simple as a quick quiz or as complex as an adaptive, AI-driven evaluation.

From my experience, the best educators don’t see assessments as a final hurdle—they see them as ongoing checkpoints. They use them to identify strengths, close learning gaps, and adjust strategies in real time. Done right, assessments become a feedback loop that benefits both students and teachers.

Whether it’s diagnostic tests before a lesson, formative assessments during a unit, or summative exams at the end, the goal is the same: make informed decisions that improve learning outcomes.

In today’s classrooms—physical or virtual—educational assessment is the engine driving continuous improvement.

Importance of Educational Assessment

I’ve worked with enough educators to know that even the best lesson plans can fall flat if you can’t see what’s really happening with your students. A good educational assessment process fixes that. It gives you real-time insight into learning, so you can act before it’s too late.

Here’s why it matters:

- Reveals learning gaps early – Spot misunderstandings before they become bad habits.

- Highlights strengths – Identify areas where students excel and build on them.

- Keeps learners engaged – Use interactive, varied assessments to maintain momentum.

- Improves teaching strategies – Adjust your approach based on what’s working—and what’s not.

- Drives data-backed decisions – From curriculum design to resource allocation, make choices with confidence.

- Meets compliance and standards – Ensure alignment with academic or industry benchmarks.

In today’s hybrid classrooms and fast-moving learning environments, this kind of visibility isn’t optional—it’s your competitive advantage.

What Are the Different Types of Educational Assessment?

No single type of assessment can give you the full picture. The most successful schools and training programs I’ve worked with use a carefully balanced mix—some to establish a baseline, some to guide progress, and some to evaluate final outcomes.

Below, I’ll break down each type, how it works, where it shines, and how you can use it to get real results.

1. Diagnostic Assessment – Setting the Starting Point for Success

Before you start teaching a new topic, stop and ask yourself: Do I know exactly what my students already understand, and where they might struggle? Without that clarity, you risk spending time on material they’ve already mastered—or worse, skipping over critical gaps that will hold them back.

That’s where diagnostic assessments come in.

What It Is:

A diagnostic assessment happens before instruction begins. It’s designed to measure a learner’s current knowledge, skills, and misconceptions so you can plan lessons that meet them where they are. Think of it as your GPS “starting location” for the learning journey.

Why It Matters:

- Helps you avoid one-size-fits-all teaching.

- Prevents boredom for advanced learners and frustration for beginners.

- Gives you a clear baseline to measure progress later.

Examples:

- In schools: A pre-unit math quiz to check mastery of prerequisite concepts.

- In higher ed: A writing skills diagnostic to identify who needs extra support.

- In training programs: A safety knowledge checklist before compliance training.

Tips for Making It Work:

- Keep it short – Limit diagnostics to 10–15 well-chosen questions. A lean assessment keeps students engaged and makes results easier to analyze.

- Align with essential skills – Focus only on the concepts and abilities that will directly influence your upcoming lessons, rather than testing everything.

- Share results – Let learners see their starting point. This builds transparency and helps them understand the purpose of the assessment.

- Group strategically – Use the data to organize learners by ability so you can tailor your instruction effectively.

- Track growth – Revisit the same concepts later in the course to measure progress against the baseline.

When done well, a diagnostic assessment isn’t just an opening exercise—it’s your best tool for designing instruction that’s targeted, efficient, and relevant.

2. Formative Assessment – Guiding Learning in Real Time

Imagine teaching for weeks, only to find out at the end that half your students misunderstood a key concept. By then, it’s too late to fix it. Formative educational assessments prevent that from happening.

What It Is:

A formative assessment is a low-stakes, ongoing check that helps you see how well learners are grasping the material while you’re still teaching it. Instead of waiting for a final exam, you get quick, actionable insights that allow you to adjust on the fly.

Why It Matters:

- Catches misunderstandings early, when they’re easiest to correct.

- Keeps learners actively engaged with the material.

- Builds a feedback loop between you and your students.

Examples:

- In schools: An exit ticket where students write down one thing they learned and one question they still have.

- In higher ed: Weekly quizzes, flashcards, or polls that provide instant feedback.

- In training programs: Live polls or quick scenario questions during a workshop.

Tips for Making It Work:

- Clarify the purpose – Make it clear that formative assessments are for improvement, not grades, so students feel safe to make mistakes.

- Act immediately – Use results to make same-day adjustments to your teaching or offer targeted support where it’s needed.

- Give specific feedback – Provide feedback that clearly identifies what’s working, what’s not, and what the next step should be.

- Mix formats – Combine peer reviews, flashcards, short reflections, polls, and varied question formats to keep activities engaging and inclusive for different learners.

Formative assessments turn every lesson into a two-way conversation. They don’t just measure progress—they help create it.

3. Summative Assessment – Measuring the Final Outcome

Every learning journey needs a finish line. Summative assessments are where you measure how much your students have learned after all the teaching is done.

What It Is:

A summative assessment evaluates learning at the end of a unit, term, or course. It’s the big-picture snapshot—showing whether learners have met the goals you set at the beginning.

Why It Matters:

- Provides a clear measure of achievement.

- Helps you evaluate the effectiveness of your teaching or training program.

- Informs important decisions like advancement, certification, or program improvements.

Examples:

- In schools: End-of-unit tests or state-level exams.

- In higher ed: Capstone projects, final research papers, or comprehensive exams.

- In training programs: Certification tests or practical skills demonstrations.

Tips for Making It Work:

- Align with objectives – Test only the skills and knowledge taught during the course to keep assessments fair and relevant.

- Mix question types – Include both recall-based and application-based items for a more complete picture of learning.

- Compare to baseline – Look at results alongside diagnostic data to measure actual growth rather than just final performance.

- Refine for next time – Use summative data to adjust content, pacing, or methods for future cohorts.

Summative educational assessments are high stakes, but they don’t have to be high stress. When they’re aligned, varied, and paired with earlier checkpoints, they become a fair and accurate measure of true learning.

Formative vs Summative vs Diagnostic Assessments

| Feature | Diagnostic Assessment | Formative Assessment | Summative Assessment |
|---|---|---|---|
| Purpose | Establish a baseline before instruction begins | Improve learning while it’s happening | Measure learning after instruction ends |
| Timing | Before a unit, course, or training starts | Ongoing, throughout the learning process | At the end of a unit, course, or training |
| Focus | Identifying prior knowledge, skills, and misconceptions | Spotting gaps, guiding teaching, and providing support | Evaluating overall mastery of content |
| Feedback | Used to plan instruction; not usually shared in detail with grades | Immediate and actionable for both teacher and learner | Often delayed, summarizing performance |
| Examples | Pre-tests, skill checklists, entry surveys | Exit tickets, quick polls, peer reviews, group discussions | Final exams, standardized tests, capstone projects |
| Impact on Grades | Usually ungraded | Usually ungraded or low-stakes | Typically graded and high-stakes |
| Learner Involvement | Moderate: students complete tasks to show starting point | High: learners actively reflect and adjust | Passive: learners complete and move on |

4. Standardized Assessment – The Common Benchmark

Sometimes you need to see how your learners measure up against a larger group—not just within your own classroom or organization. That’s where standardized assessments come in.

What It Is:

A standardized assessment is designed, administered, and scored in the same way for every test-taker. This consistency allows you to compare results across classes, schools, regions, or even countries.

Why It Matters:

- Provides a common benchmark for evaluating performance at scale.

- Helps identify broad learning trends and gaps.

- Useful for meeting state, national, or industry compliance requirements.

Examples:

- In schools: Statewide reading or math exams.

- In higher ed: SAT, ACT, GRE, or other entrance exams.

- In training programs: Industry-standard safety or compliance tests.

Tips for Making It Work:

- Use as one data point – Combine standardized results with other assessment types to avoid over-reliance on a single score.

- Prepare learners – Familiarize students with both the content and the format of the test to reduce anxiety.

- Analyze patterns – Look for trends in the data to identify systemic strengths and weaknesses, not just individual scores.

- Balance formats – Pair standardized testing with creative or performance-based tasks to capture a wider skill set.

Standardized assessments can reveal valuable insights at scale, but they’re most powerful when combined with more personalized measures of learning.

5. Performance-Based Assessment – Showing What Learners Can Do

Some skills can’t be measured by multiple-choice questions. That’s where performance-based assessments shine—they ask learners to demonstrate their abilities in action.

What It Is:

A performance-based educational assessment requires learners to apply their knowledge and skills to complete a task or solve a real-world problem. It measures both the process and the end result.

Why It Matters:

- Tests practical skills, not just theoretical knowledge.

- Builds learner confidence by connecting lessons to real applications.

- Often better reflects readiness for the next step—whether that’s a job, a project, or higher-level coursework.

Examples:

- In schools: Designing and presenting a science experiment, performing in a school play, or participating in a debate.

- In higher ed: Engineering students building prototypes, art students curating exhibitions.

- In training programs: Sales teams delivering a mock pitch, IT staff troubleshooting a simulated system outage.

Tips for Making It Work:

- Create clear rubrics – Define criteria for both the process and the final product so scoring is consistent and transparent.

- Make it realistic – Use scenarios that mirror actual challenges learners might face outside the classroom.

- Offer feedback loops – Build in opportunities for self-review and peer feedback to deepen reflection and learning.

- Document performances – Record presentations or tasks so they can be reviewed for more thorough evaluation.

Performance-based assessments bridge the gap between learning and doing, turning knowledge into capability.

6. Norm-Referenced, Criterion-Referenced, and Ipsative Assessments – Framing the Results

How you compare and interpret assessment results changes the entire story they tell. These three approaches give you different lenses for evaluating performance.

What They Are:

- Norm-referenced: Compares a learner’s performance to that of a larger group, showing where they rank (e.g., percentile scores).

- Criterion-referenced: Measures performance against a fixed set of standards or objectives (e.g., “80% mastery required to pass”).

- Ipsative: Compares a learner’s current performance to their own previous performance, focusing on personal growth.
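
To make the three lenses concrete, here is a minimal Python sketch that reads the same raw score in each way. The function names, the sample group scores, and the tie-handling rule in the percentile calculation are illustrative assumptions, not a standard any testing body prescribes; the 80% threshold simply mirrors the example above.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score, group_scores):
    """Norm-referenced: percent of the group below this score (ties count half)."""
    ordered = sorted(group_scores)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def meets_criterion(score, max_score, threshold=0.80):
    """Criterion-referenced: has the learner reached the mastery threshold?"""
    return score / max_score >= threshold


def ipsative_change(previous_score, current_score):
    """Ipsative: change relative to the learner's own earlier result."""
    return current_score - previous_score


# Hypothetical data: one learner scored 78/100 now and 64/100 last time.
group = [55, 62, 70, 70, 78, 85, 91]
print(percentile_rank(78, group))   # about 64.3
print(meets_criterion(78, 100))     # False
print(ipsative_change(64, 78))      # 14
```

The same 78 looks above average against the group, falls short of an 80% mastery bar, and shows clear personal growth, which is why the choice of lens matters.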

Comparison at a Glance:

| Criteria | Norm-Referenced | Criterion-Referenced | Ipsative |
|---|---|---|---|
| Purpose | Rank learners relative to a group | Measure against set standards or objectives | Measure personal improvement over time |
| Score Interpretation | Based on percentile or ranking | Based on mastery (pass/fail or score threshold) | Based on change from previous performance |
| Content Scope | Broad, often covering multiple skills or topics | Narrow, aligned with specific learning objectives | Varies; focuses on learner’s individual trajectory |
| Best For | Selection, placement, identifying high/low performers | Certification, standards compliance, curriculum alignment | Motivation, personal goal setting, growth tracking |
| Example | National percentile on SAT | 80% required to pass a driving test | Comparing early and final drafts of an essay |

Why It Matters:

- Norm-referenced results show competitiveness or ranking.

- Criterion-referenced results show mastery of specific skills.

- Ipsative results highlight progress, which can boost motivation even for struggling learners.

Tips for Making It Work:

- Match to goals – Choose the comparison method that best fits your assessment’s purpose, whether it’s ranking, mastery, or growth.

- Use ipsative for growth – Highlight personal improvement to build confidence and motivation in learners.

- Combine methods – For high-stakes situations, use both norm- and criterion-referenced approaches for a balanced view.

- Be transparent – Explain how performance is being measured so learners feel the process is fair.

The lens you choose shapes how results are interpreted—and how learners feel about their own progress.

7. Alternative & Authentic Assessments – Beyond Traditional Tests

Not all learning can—or should—be measured with multiple-choice questions. Some skills, like creativity, collaboration, and problem-solving, require assessments that mirror real-world tasks. That’s where alternative and authentic assessments come in.

What They Are:

- Alternative Assessments: Any method outside traditional test formats, often more flexible and student-centered.

- Authentic Assessments: Tasks that closely replicate real-world applications of knowledge and skills.

Why They Matter:

- Capture skills traditional tests overlook.

- Give learners the chance to show their abilities in context.

- Build connections between classroom learning and real-life application.

Examples:

- In schools: Student portfolios, group projects, debates, or science fairs.

- In higher ed: Case study analysis, field research, or design presentations.

- In training programs: Client simulations, project pitches, or live demonstrations.

Tips for Making Them Work:

- Align with objectives – Make sure the task directly measures the skills and knowledge you want to assess.

- Create clear rubrics – Outline exactly what success looks like so scoring is consistent.

- Offer choice – Give learners options for how to demonstrate mastery, boosting engagement and ownership.

- Encourage reflection – Ask learners to explain their thinking or process to reveal deeper understanding.

When well-designed, alternative and authentic educational assessments don’t just measure learning—they extend it, preparing students for the skills they’ll need beyond the classroom.

8. Adaptive Assessment – Personalizing the Path

One-size-fits-all testing doesn’t work for every learner. Adaptive assessments change that by adjusting difficulty in real time based on how a learner responds.

What It Is:

An adaptive assessment uses algorithms or AI to personalize the test experience. If a learner answers correctly, the system offers a more challenging question; if they struggle, it presents an easier one. The goal is to pinpoint the learner’s true level without making the test unnecessarily long or frustrating.
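
The up-or-down rule described above can be sketched in a few lines of Python. This is a deliberately simple "staircase" rule over five hypothetical difficulty levels, written only to illustrate the idea; production adaptive tests generally rely on more sophisticated statistical models, and nothing here reflects how any particular product works.

```python
def next_difficulty(current, answered_correctly, lowest=1, highest=5):
    """Step up one level after a correct answer, down one after a miss."""
    step = 1 if answered_correctly else -1
    return max(lowest, min(highest, current + step))


def run_adaptive_session(responses, start=3):
    """Walk through a list of True/False responses.

    Returns the difficulty level shown for each question and the level
    the learner would see next (a rough estimate of where they settle).
    """
    level = start
    presented = []
    for correct in responses:
        presented.append(level)
        level = next_difficulty(level, correct)
    return presented, level


# Hypothetical learner: two correct answers, a miss, a correct answer, a miss.
levels, final_level = run_adaptive_session([True, True, False, True, False])
print(levels)       # [3, 4, 5, 4, 5]
print(final_level)  # 4
```

The learner bounces between levels 4 and 5, which suggests their current ability sits around that boundary without needing a long fixed-form test.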

Why It Matters:

- Keeps learners in their optimal challenge zone.

- Reduces frustration for struggling students and boredom for advanced ones.

- Can provide a more accurate picture of skill mastery in less time.

Examples:

- In schools: Computer-based reading programs that adjust difficulty with each question.

- In higher ed: Adaptive math testing that homes in on a student’s problem areas.

- In training programs: Personalized compliance modules that skip known content.

Tips for Making It Work:

- Explain the process – Tell learners how adaptive testing works to build trust and avoid confusion.

- Pair with other methods – Use adaptive tools alongside formative and performance-based assessments for a complete picture.

- Monitor accuracy – Check regularly that the adaptive system’s difficulty adjustments are functioning correctly.

- Ensure accessibility – Make sure the technology works for all learners, including those who require assistive tools.

Adaptive assessments are powerful, but they work best when learners trust the process and when they’re part of a broader, varied assessment strategy.

Key Challenges and Concerns in Educational Assessment

Understanding the different types of educational assessment is only half the story. In real classrooms and training environments, these assessments face challenges that can undermine their effectiveness.

Based on educator feedback, customer voice data, and insights from professional discussions, here are the most common concerns—and how to address them.

1. Over-Testing in Education

Excessive testing can narrow the curriculum, create student anxiety, and reduce time for deeper learning. Educators and instructional leaders warn that frequent high-stakes exams often leave less space for creativity, critical thinking, and collaboration.

How to Address It:

- Use a balanced mix of assessment types so no single format dominates.

- Replace some high-stakes exams with authentic assessments or project-based tasks.

- Focus on assessment for learning—providing feedback during the process—rather than just assessment of learning.

2. Assessment Bias and Curriculum Misalignment

Students often report that certain tests feel disconnected from what was taught, or include “trick questions” that don’t reflect learning objectives. This misalignment can hurt credibility and student motivation.

How to Address It:

- Develop a clear blueprint linking every test item to specific learning objectives.

- Review assessments for cultural, language, and context bias.

- Gather post-assessment feedback from learners to identify misalignment.

3. Time Constraints in Educational Assessment

Educators and trainers frequently cite time limitations as a barrier to conducting varied and meaningful assessments. Large-scale tests often dominate limited instructional time, leaving less space for formative or authentic methods.

How to Address It:

- Use shorter, targeted assessments to gather the same insights in less time.

- Leverage technology for auto-grading and instant feedback.

- Integrate assessment into learning activities so testing doesn’t feel separate from teaching.

4. Accessibility and Assessment Tools

Not all learners have equal access to the technology or resources needed for modern assessments. Teachers and trainers also note usability concerns with some online platforms.

How to Address It:

- Choose quiz tools that work across devices and on slow internet connections.

- Provide offline or low-tech alternatives where needed.

- Test tools for accessibility with screen readers and other assistive technologies.

5. Feedback Quality in Educational Assessment

Some educators provide only grades, with little actionable feedback. Students value timely, specific feedback that helps them understand how to improve.

How to Address It:

- Give feedback that is clear, targeted, and actionable—avoid vague comments like “good job” or “needs work.”

- Use rubrics to standardize feedback and set clear expectations.

- Build feedback into the learning process so it’s ongoing, not just after the final grade.

6. Concerns With Adaptive Testing

While adaptive assessments can personalize learning, some students feel they’re unfair because not everyone receives the same questions. Others struggle with the “hidden” nature of difficulty adjustments.

How to Address It:

- Clearly explain how adaptive testing works before the assessment begins.

- Pair adaptive testing with a traditional component for transparency.

- Use adaptive systems for formative purposes rather than for high-stakes evaluation.

7. Criticism of Automated Essay Scoring (AES)

Instructors have raised concerns that AES can over-rely on surface features like grammar and length, sometimes rewarding nonsensical writing. Many worry it may undervalue creativity.

How to Address It:

- Use AES for initial scoring or practice, but include human review for high-stakes writing tasks.

- Calibrate AES tools to match your rubrics and priorities.

- Train students to interpret automated feedback critically.

Addressing these challenges isn’t just about fixing what’s broken—it’s about raising the overall quality of assessments so they genuinely support learning. That means designing them with intention, aligning them with clear objectives, and making sure they’re fair, reliable, and useful for both teachers and students.

Let’s look at the core qualities that make an educational assessment “good” and worth the time and effort it takes to create and administer.

What Makes an Educational Assessment “Good”

A good educational assessment doesn’t just measure learning—it improves it. Over the years, I’ve seen well-designed assessments transform classrooms, training rooms, and even entire programs.

The difference comes down to three core qualities: alignment, validity, and reliability. Get these right, and your assessments become not just tests, but tools for growth.

1. Alignment with Learning Objectives

An assessment should be a direct reflection of what you set out to teach. If your goal is to develop problem-solving skills, but your test only checks for memorized facts, you’re sending mixed signals to learners.

How to Get It Right:

- Map each question or task to a specific learning objective.

- Review the assessment to ensure all objectives are covered and weighted appropriately.

- Avoid adding questions “just because they’re interesting” if they don’t connect to your goals.
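
If you keep your questions in a spreadsheet or export, the mapping check above is easy to automate. The following Python sketch assumes a hypothetical structure in which each item records the objective it targets and its point value; the identifiers (`LO1`, `Q1`, and so on) are made up for illustration.

```python
from collections import Counter


def blueprint_report(items, objectives):
    """Summarize how assessment items map onto learning objectives.

    items: dict of item id -> (objective id or None, points)
    objectives: list of objective ids the course set out to teach
    """
    points = Counter()
    unmapped = []
    for item_id, (objective, pts) in items.items():
        if objective in objectives:
            points[objective] += pts
        else:
            unmapped.append(item_id)  # item is not tied to any stated goal
    total = sum(points.values())
    weights = {obj: (points[obj] / total if total else 0.0) for obj in objectives}
    uncovered = [obj for obj in objectives if points[obj] == 0]
    return {"weights": weights, "uncovered": uncovered, "unmapped": unmapped}


# Hypothetical draft test: LO3 is never assessed and Q4 maps to nothing.
draft = {"Q1": ("LO1", 2), "Q2": ("LO1", 2), "Q3": ("LO2", 4), "Q4": (None, 2)}
report = blueprint_report(draft, ["LO1", "LO2", "LO3"])
print(report["weights"])    # {'LO1': 0.5, 'LO2': 0.5, 'LO3': 0.0}
print(report["uncovered"])  # ['LO3']
print(report["unmapped"])   # ['Q4']
```

An uncovered objective or an unmapped question is a prompt to revisit the draft, not an automatic verdict; the weighting you want depends on your course.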

2. Validity – Measuring What You Intended to Measure

A valid educational assessment captures the skill or knowledge it’s meant to assess—nothing more, nothing less. For example, if you want to test historical analysis skills, don’t rely solely on multiple-choice recall questions.

How to Get It Right:

- Choose formats that match the skill (e.g., essays for critical thinking, simulations for technical skills).

- Pilot your assessment with a small group to ensure questions work as intended.

- Ask a colleague to review for alignment and clarity.

3. Reliability – Producing Consistent Results

Reliability means that if a learner took the same assessment twice under the same conditions, the results would be similar. Inconsistent assessments create confusion and undermine trust.
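
One common way to put a number on this is to correlate scores from two sittings of the same assessment. The Python sketch below computes a Pearson correlation by hand on made-up scores; it assumes the same learners appear in the same order in both lists, and it is only one of several ways to estimate reliability.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equally long lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long lists with at least two scores")
    mean_a = sum(first) / len(first)
    mean_b = sum(second) / len(second)
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_a * var_b)


# Hypothetical scores for four learners on two sittings of the same quiz.
first_sitting = [60, 70, 80, 90]
second_sitting = [62, 68, 83, 88]
print(round(pearson_r(first_sitting, second_sitting), 2))  # 0.98
```

A value close to 1 means learners kept roughly the same relative standing across sittings; a much lower value is a cue to look for ambiguous questions or inconsistent scoring.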

How to Get It Right:

- Use clear instructions and standardized scoring rubrics.

- Train graders or reviewers to ensure consistency.

- Eliminate ambiguous questions that could be interpreted in multiple ways.

When these three qualities come together, assessments give students confidence that what’s being measured is fair, relevant, and meaningful, and they give educators clear, actionable data to improve teaching.

How to Leverage Technology for Educational Assessments

Technology has transformed assessments from static, paper-based events into interactive, data-rich experiences. Done right, it saves time, improves engagement, and gives you deeper insights.

1. Automation and Instant Feedback

Online tools can auto-grade quizzes, deliver instant results, and even provide tailored feedback. This saves educators time and gives learners immediate direction.

How to Use It Effectively:

- Customize automated feedback to be specific and actionable, not generic.

- Pair automation with occasional manual review to maintain quality.

- Use analytics to spot performance trends and adjust teaching strategies.

2. Security and Cheating Prevention

Features like browser lockdown, secure proctoring, and copy-paste blocking help maintain fairness in online educational assessments.

How to Use It Effectively:

- Balance security with learner comfort—overly strict controls can increase anxiety.

- Be transparent about the security measures in place.

- Include question types and applied tasks that are naturally harder to cheat on.

3. Integration with Learning Systems

Assessment tools work best when they connect seamlessly to your LMS, content library, and reporting systems.

How to Use It Effectively:

- Choose tools that support single sign-on, API integration, and data exports.

- Merge assessment results with other learner metrics for a fuller picture.

- Automate result-sharing with learners and stakeholders.

4. AI-Powered Adaptive Testing

Adaptive technology adjusts question difficulty in real time based on learner responses, creating a personalized experience that challenges advanced learners while supporting those who need more help.

How to Use It Effectively:

- Explain to learners how adaptive testing works to build trust.

- Combine adaptive methods with other assessments for fairness and transparency.

- Review algorithm results periodically to ensure accuracy and alignment with your objectives.

Best Practices for Creating Effective Educational Assessments

We’ve covered a lot of ground—now let’s pull it all together into the core best practices for creating assessments that actually work.

- Align Every Item With Learning Objectives

Make sure each question or task connects directly to what you set out to teach. Avoid adding content that isn’t tied to your goals—it confuses learners and dilutes the results.

- Use a Balanced Mix of Assessment Types

Combine diagnostic, formative, summative, and authentic methods to get a complete picture of learning. Relying on one type alone can give you a narrow or misleading view.

- Keep Assessments Fair and Accessible

Check for cultural or language bias, ensure clarity, and test usability across devices. Provide accommodations for different learning needs so everyone has a fair shot at success.

- Give Clear, Actionable Feedback

Go beyond grades—tell learners what they did well, where they need improvement, and how to get there. Build feedback into the learning process, not just at the end.

- Leverage Technology Thoughtfully

Use tools for automation, security, integration, and adaptive testing to save time and improve accuracy—but always keep human oversight in the loop for quality control.

- Review and Refine Regularly

Analyze results to see which questions worked, which didn’t, and why. Use that insight to improve future assessments.

Follow these principles, and your assessments will not only measure learning—they’ll actively contribute to it.

How to Create an Online Educational Assessment Quiz

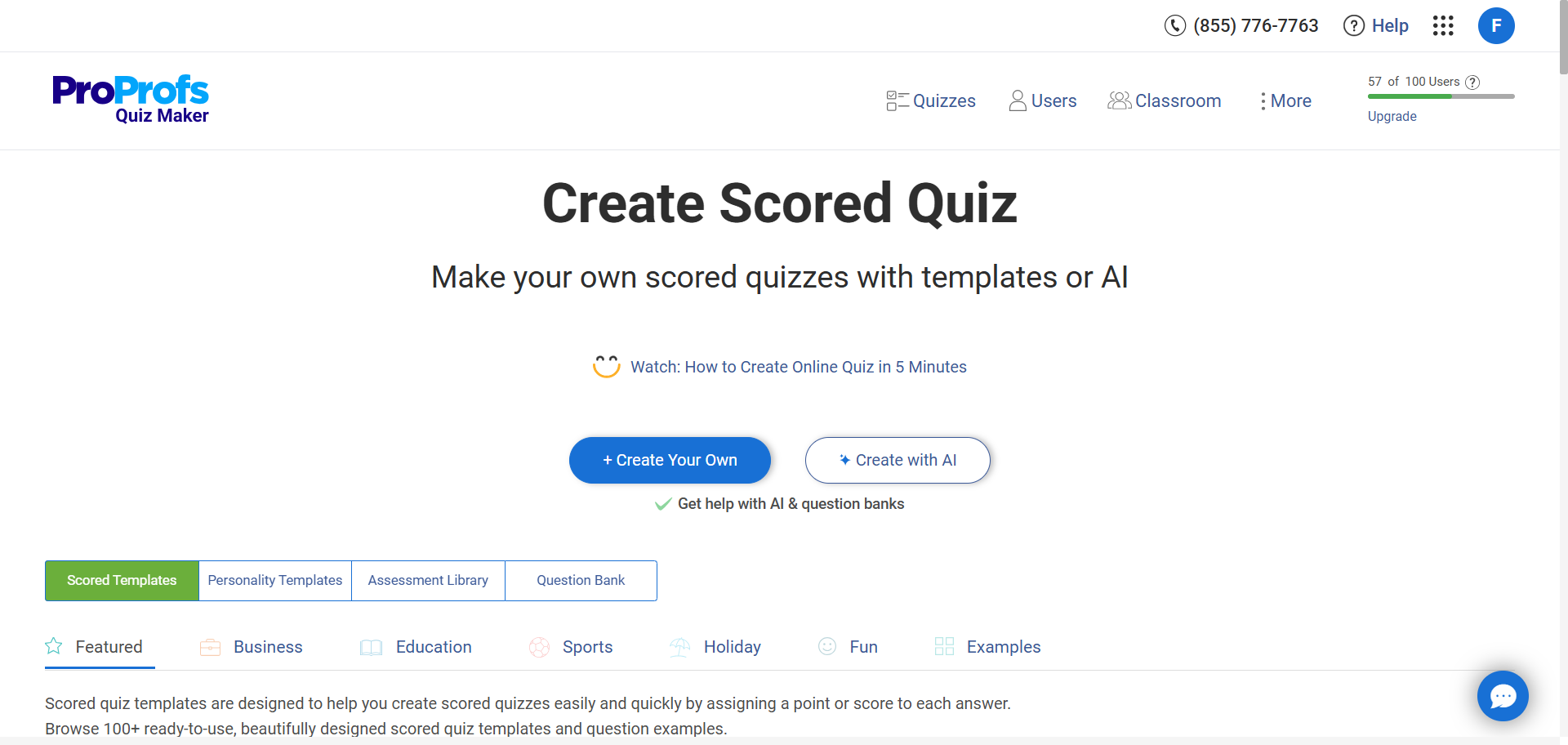

You don’t need to be a tech expert to create an engaging online test. With the right tool, you can go from idea to published quiz in minutes. Here’s a step-by-step guide using ProProfs Quiz Maker as an example.

Step 1: Choose Your Creation Method

Decide how you want to start:

- From scratch – Build your quiz entirely your way.

- From templates – Choose from thousands of ready-to-use quizzes.

- With AI – Let AI generate tailored questions based on your topic, difficulty, and learning goals.

If You Choose AI:

- Click Create with AI.

- Enter your topic, number of questions, difficulty, and whether to include explanations or outcomes.

- (Optional) Upload content—like a document, image, video, or webpage link—for AI to create questions from.

- Click Generate and review the results, selecting or regenerating as needed.

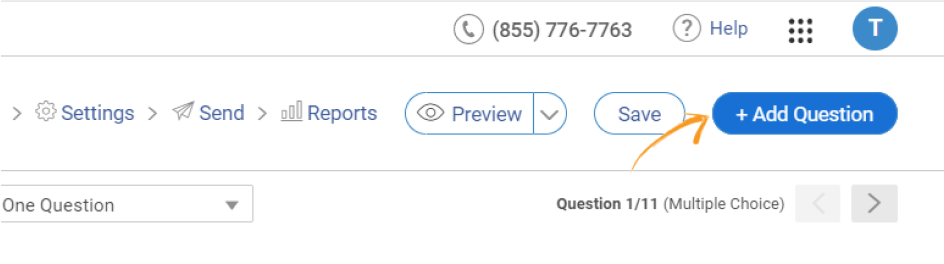

Step 2: Add Questions

You can:

- Manually create from 20+ interactive question types (drag-and-drop, hotspot, video response, etc.).

- Use the question bank with over a million ready-made questions.

- Import in bulk from Excel or Word.

- Use AI to quickly generate questions from your topic.

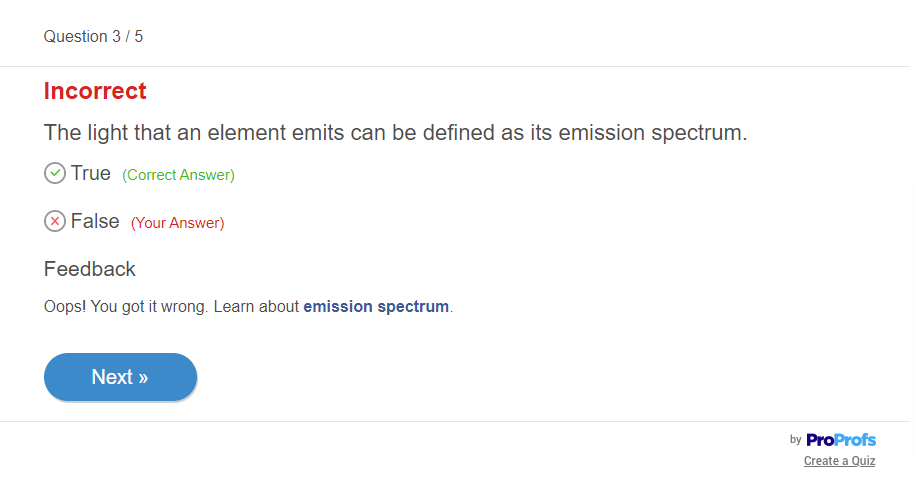

Step 3: Automate Grading

In the Settings tab, choose:

- Regular grading – Full points only if all correct answers are chosen.

- Partial grading – Award points for partially correct responses.

- Custom grading – Assign different weights to different answers.

You can also:

- Enable negative marking to discourage guessing.

- Award bonus points for conditions like early completion.

- Add feedback for correct, incorrect, or individual answer choices so students learn instantly.
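
To see how these grading options differ in practice, here is a small Python sketch that scores a single multiple-response question under each scheme. It is an illustration of the general ideas only: the function names, the partial-credit formula, and the way the penalty is applied are assumptions of this sketch, not a description of how ProProfs Quiz Maker calculates scores.

```python
def score_multi_select(selected, correct, points=1.0, mode="regular", penalty=0.0):
    """Score one multiple-response question.

    selected, correct: sets of answer-option ids
    mode: "regular" awards full points only for an exact match;
          "partial" awards an equal share per correct option chosen and
          removes a share per incorrect option chosen (never below 0)
    penalty: negative marking, deducted when an attempted question earns nothing
    """
    if not correct:
        raise ValueError("a question needs at least one correct option")
    if mode == "regular":
        earned = points if selected == correct else 0.0
    elif mode == "partial":
        share = points / len(correct)
        hits = len(selected & correct)
        misses = len(selected - correct)
        earned = max(0.0, share * (hits - misses))
    else:
        raise ValueError(f"unknown mode: {mode}")
    if earned == 0.0 and selected:  # skipped questions are not penalized
        earned -= penalty
    return earned


def score_custom(selected, option_weights):
    """Custom grading: each chosen option carries its own weight."""
    return sum(option_weights.get(option, 0.0) for option in selected)


answer_key = {"A", "C"}
print(score_multi_select({"A", "C"}, answer_key))                  # 1.0
print(score_multi_select({"A"}, answer_key))                       # 0.0
print(score_multi_select({"A"}, answer_key, mode="partial"))       # 0.5
print(score_multi_select({"B"}, answer_key, penalty=0.25))         # -0.25
print(score_custom({"A", "B"}, {"A": 1.0, "B": 0.5, "C": 0.0}))    # 1.5
```

Whichever scheme you pick, tell learners in advance, especially if wrong answers cost points.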

Step 4: Configure Settings

Fine-tune how your quiz works:

- Time limits – For the whole quiz or per question.

- Security – Randomize questions, shuffle answer options, disable tab-switching, or enable proctoring.

- Access control – Add password protection or restrict to specific groups.

- Availability – Set start and end dates.

- Notifications – Get alerts when quizzes are submitted and let students know when results are ready.
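
For readers curious about what randomizing questions and shuffling answer options involves, the Python sketch below builds a per-learner version of a quiz. The data layout and the idea of seeding the shuffle from the learner and quiz ids are assumptions chosen for illustration; a quiz platform handles this for you and may do it differently.

```python
import random


def build_quiz_form(questions, learner_id, quiz_id="quiz"):
    """Return a per-learner copy of the quiz with questions and options shuffled.

    questions: list of dicts with "prompt" and "options" keys
    The shuffle is seeded from the quiz and learner ids, so the same learner
    always gets the same order (handy when reviewing a submission later).
    """
    rng = random.Random(f"{quiz_id}:{learner_id}")
    form = []
    for question in questions:
        options = list(question["options"])  # copy so the original is untouched
        rng.shuffle(options)
        form.append({"prompt": question["prompt"], "options": options})
    rng.shuffle(form)
    return form


# Hypothetical two-question quiz.
bank = [
    {"prompt": "2 + 2 = ?", "options": ["3", "4", "5"]},
    {"prompt": "Capital of France?", "options": ["Paris", "Rome", "Madrid"]},
]
for question in build_quiz_form(bank, learner_id="learner-1"):
    print(question["prompt"], question["options"])
```

Because each learner sees a different order, copying from a neighbor becomes harder, while the content of the assessment stays identical for everyone.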

Step 5: Share Your Quiz

Distribute your quiz your way:

- Email invitations.

- Direct link for quick access.

- Embed it on your website or blog.

- Virtual Classroom – Assign quizzes to groups, track progress, and centralize all quiz management in one place.

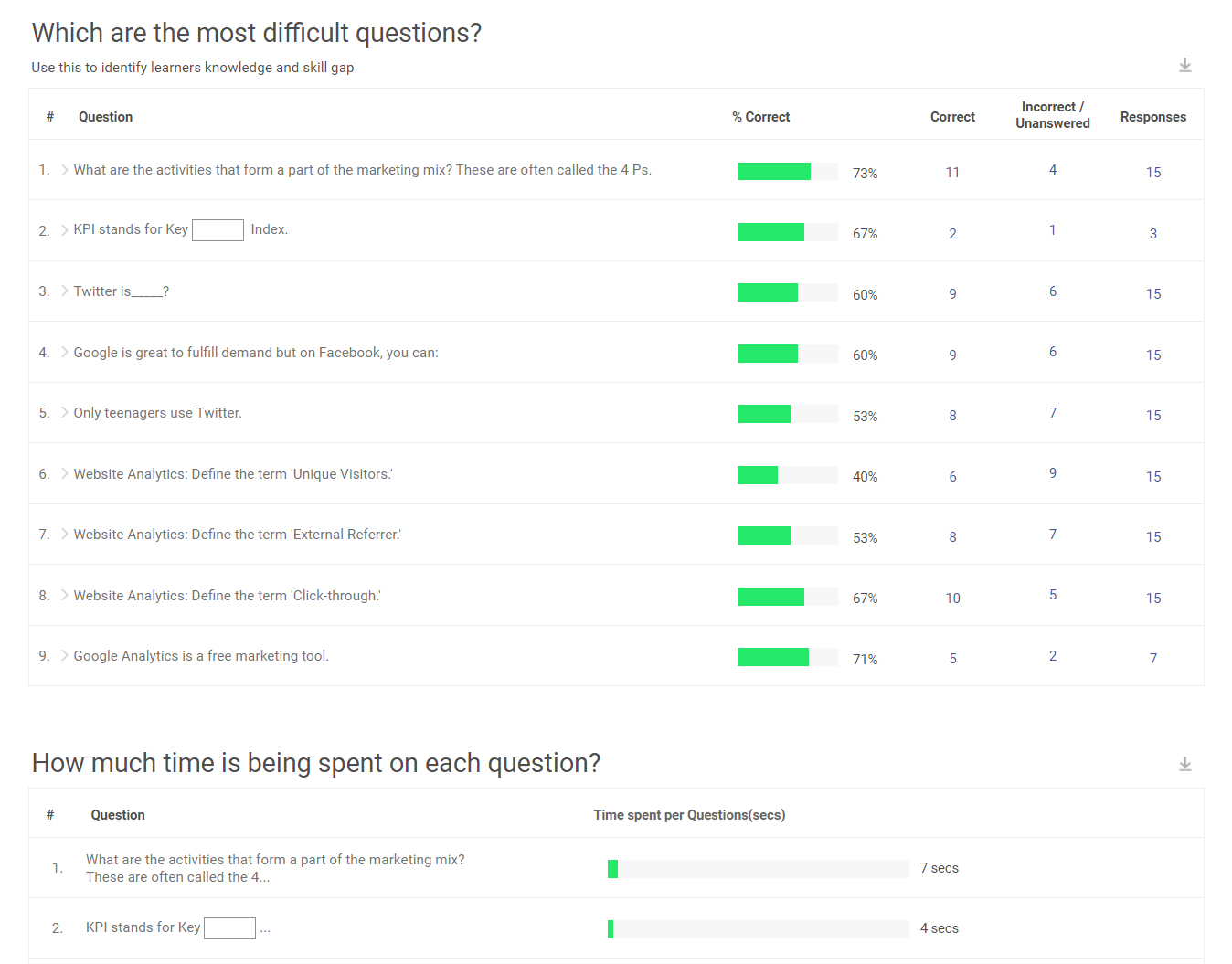

Step 6: Review Results

Once responses are in, use reporting tools to:

- View individual performance (scores, answers, completion time).

- Compare group or cohort results to spot trends.

- Identify knowledge gaps from frequently missed questions.

- Export reports in PDF or Excel to share or store.

How to Measure & Ensure Assessment Effectiveness

Creating an assessment is only the first step. The real question is—does it work? The best assessments do more than generate scores; they give you clear, actionable insights into learner performance and help you refine your teaching or training.

Here’s how to evaluate whether your educational assessment is hitting the mark:

- Dive Deep into Performance Data

Look beyond overall scores. Which questions did most learners get wrong? Which ones were too easy? This pattern analysis shows whether your assessment is measuring what matters or if it’s missing the mark.

- Gather Learner Feedback

Ask students how fair, relevant, and clear they found the assessment. Were the questions a good reflection of what was taught? Did the feedback help them improve? Their answers will reveal gaps you might miss from scores alone.

- Review Question Quality

Analyze each item for clarity, difficulty, and alignment with learning objectives. Remove or rewrite confusing questions, overly tricky ones, or those that don’t connect to your course content.

- Cross-Check with Other Indicators

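Compare assessment results with other performance data, such as class participation, assignments, and project outcomes. If the trends match, that is good evidence your assessment is measuring what you intend. If they diverge, take a closer look at the assessment before drawing conclusions about your learners.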

- Turn Insights into Action

Use your findings to adapt instruction, add targeted practice, or adjust your grading criteria. The most effective educational assessments lead directly to improvements in teaching and learning.
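
One simple way to run the cross-check described above is to correlate assessment scores with another measure for the same learners. Here is a rough Python sketch using the Pearson correlation coefficient. The score lists are made-up numbers, and the function name `pearson` is my own; a value near 1 suggests the two measures rank learners similarly, while a value near 0 suggests they are picking up different things.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)


# Illustrative data: quiz and project scores for the same five learners.
quiz_scores = [55, 62, 70, 81, 90]
project_scores = [58, 60, 75, 79, 93]

r = pearson(quiz_scores, project_scores)
print(f"Correlation between quiz and project scores: {r:.2f}")
```

With a single class the sample is small, so read the number as a rough signal rather than proof. A low correlation is a reason to investigate, not necessarily a sign the assessment is broken.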

When you treat your assessments as living tools—constantly reviewed, refined, and improved—they stop being just checkpoints and become powerful drivers of progress.

Boost Teaching Outcomes With Better Assessments

Strong educational assessments do more than record a score—they influence how students think, engage, and retain knowledge long after the test is over. By weaving assessment into the learning process, you create moments for reflection, motivation, and course correction.

The most impactful assessments aren’t simply added at the end; they’re designed from the start to guide decisions, personalize instruction, and make progress visible for every learner.

ProProfs Quiz Maker gives you the tools to make this vision practical. From AI-generated questions to interactive formats and automated feedback, it streamlines the entire process without sacrificing quality or creativity.

You can adapt it for quick pulse checks, in-depth evaluations, or personalized learning paths, ensuring every assessment you give is purposeful, engaging, and geared toward real improvement.

Frequently Asked Questions

What are the three main kinds of educational assessment?

The three main kinds are diagnostic, formative, and summative. Diagnostic assessments identify prior knowledge before teaching. Formative assessments monitor learning during instruction. Summative assessments measure achievement at the end of a course or unit. Together, they offer a complete view of student progress and learning effectiveness.

What is an example of an educational assessment?

Examples include standardized tests, online quizzes with instant feedback, portfolios showing long-term work, and project-based tasks applying skills to real situations. Each format serves a unique purpose, from measuring mastery to evaluating skill application, and helps educators better understand and support student learning.

What makes an educational assessment fair and reliable?

A fair educational assessment aligns with objectives, uses clear instructions, and avoids bias. A reliable one produces consistent results under the same conditions. Together, these qualities support accurate scoring, build trust, and help educators make sound decisions about student performance and areas needing improvement.

How can technology improve the educational assessment process?

Technology improves assessments with automated grading, AI-generated quizzes, instant feedback, secure proctoring, and analytics. These tools save time, scale easily, and give educators actionable data to personalize instruction and make assessments more engaging, efficient, and effective for both teachers and learners.

How can I make educational assessments more engaging for students?

Engage students by mixing question types, adding multimedia, and providing quick feedback. Use gamification, real-world scenarios, and online quizzes to make learning interactive. Offering choice in how students demonstrate knowledge boosts motivation and creates a more meaningful assessment experience.
